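In this story, we explore how to create a simple web-based chat application that communicates with a private REST API and uses OpenAI functions and conversational memory.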

We will again use the LangChain framework, which provides good infrastructure for interacting with large language models (LLMs).

The agent we describe in this article uses the following tools:

- Wikipedia with LangChain’s WikipediaAPIWrapper

- DuckDuckGo Search with LangChain’s DuckDuckGoSearchAPIWrapper

- Pubmed with PubMedAPIWrapper

- LLM Math Chain with LLMMathChain

- Events API with a custom implementation which we describe later.

The agent will have two user interfaces:

- A Streamlit-based web client

- A command-line interface

OpenAI functions

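One of the main problems when handling responses from an LLM such as ChatGPT is that the responses are not fully predictable. When you try to parse them, subtle variations in the output make parsing by programmatic tools error-prone. Because a LangChain agent sends user input to the LLM and expects it to route the output to a specific tool (or function), the agent needs to be able to parse predictable output.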

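To solve this problem, OpenAI introduced "function calling" on June 13, 2023. It lets developers describe functions to the model, which can then return JSON output stating which function (in LangChain parlance: tool) to call for a given input, and with which arguments.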

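The approach LangChain agents originally used (for example, the ZERO_SHOT_REACT_DESCRIPTION agent) relies on prompt engineering to route messages to the right tool. That approach is potentially less accurate and slower than the one based on OpenAI functions.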
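To make the fragility of text-based routing concrete, here is a minimal sketch of a parser for an "Action / Action Input" style reply. The regular expression and the two sample replies are assumptions made up for illustration; this is not LangChain's actual output parser.

```python
import re
from typing import Optional, Tuple

# Pattern for an "Action / Action Input" layout, as used by ReAct-style prompts.
# Illustrative only; a real agent's parser may differ.
ACTION_PATTERN = re.compile(r"Action: (.*?)\nAction Input: (.*)", re.DOTALL)


def parse_action(llm_output: str) -> Optional[Tuple[str, str]]:
    """Return (tool, tool_input) if the text matches the expected layout, else None."""
    match = ACTION_PATTERN.search(llm_output)
    if match is None:
        return None
    return match.group(1).strip(), match.group(2).strip()


# Two hand-written replies: one follows the layout, one deviates slightly.
well_formed = "Thought: I should look this up.\nAction: Wikipedia\nAction Input: Albert Einstein"
slightly_off = "Thought: I should look this up.\nAction - Wikipedia\nInput: Albert Einstein"

print(parse_action(well_formed))   # ('Wikipedia', 'Albert Einstein')
print(parse_action(slightly_off))  # None
```

A small deviation in wording is enough to make the parse fail, which is the kind of error that structured function calls are meant to avoid.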

At the time of writing, the models that support this feature are:

- gpt-4-0613

- gpt-3.5-turbo-0613 (including gpt-3.5-turbo-16k-0613, which we used for our playground chat agent)

Here are some examples given by OpenAI of how function calling works:

Convert queries such as “Email Anya to see if she wants to get coffee next Friday” to a function call like send_email(to: string, body: string), or “What’s the weather like in Boston?” to get_current_weather(location: string, unit: 'celsius' | 'fahrenheit').

Internally, the instructions about the functions and their parameters are injected into the system message.

The API endpoint is:

POST https://api.openai.com/v1/chat/completions

You can find the low-level API details here:

https://api.openai.com/v1/chat/completions
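As a rough illustration of what travels over this endpoint, the sketch below builds a request body with a `functions` list and decodes the `function_call` part of an assistant message. No network call is made. The weather function and the sample assistant message are hand-written stand-ins, not real API output.

```python
import json

# JSON Schema description of one function the model may choose to call.
# The function itself is illustrative.
functions = [
    {
        "name": "get_current_weather",
        "description": "Get the current weather in a given location",
        "parameters": {
            "type": "object",
            "properties": {
                "location": {"type": "string", "description": "City name, e.g. Boston"},
                "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
            },
            "required": ["location"],
        },
    }
]

# Body that would be POSTed to the chat completions endpoint.
request_body = {
    "model": "gpt-3.5-turbo-0613",
    "messages": [{"role": "user", "content": "What's the weather like in Boston?"}],
    "functions": functions,
}

# Hand-written stand-in for the assistant message of a response.
sample_message = {
    "role": "assistant",
    "content": None,
    "function_call": {
        "name": "get_current_weather",
        "arguments": "{\"location\": \"Boston\", \"unit\": \"celsius\"}",
    },
}


def extract_call(message: dict) -> tuple:
    """Return (function_name, arguments_dict) from an assistant message."""
    call = message["function_call"]
    # The arguments arrive as a JSON-encoded string and must be decoded.
    return call["name"], json.loads(call["arguments"])


name, arguments = extract_call(sample_message)
print(name, arguments)
```

Because the function name and arguments come back in dedicated JSON fields, the caller does not have to pick them out of free-form text.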

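Agent loop

As far as the author of this blog can tell, the agent loop is the same as for most agents. The base agent code is similar to that of the ZERO_SHOT_REACT_DESCRIPTION agent. The agent loop can therefore still be described by the following diagram: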
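In code form, such a loop can be sketched roughly as follows: ask the model what to do, run the chosen tool, feed the observation back, and stop once the model returns a final answer. Everything here is hypothetical (the `fake_model` stand-in, the toy calculator, the shape of the decision dictionaries); it is not LangChain's AgentExecutor.

```python
from typing import Callable, Dict, List

# A "decision" is either a tool call or a final answer. Both shapes are hypothetical.
Decision = Dict[str, str]


def fake_model(history: List[str]) -> Decision:
    """Stand-in for the LLM: requests the calculator once, then answers."""
    observations = [h for h in history if h.startswith("Observation:")]
    if not observations:
        return {"type": "tool", "tool": "Calculator", "input": "2 + 3"}
    result = observations[-1].split(": ", 1)[1]
    return {"type": "final", "output": f"The result is {result}"}


def calculator(expression: str) -> str:
    """Toy tool that only understands 'a + b' with integers."""
    left, right = expression.split("+")
    return str(int(left) + int(right))


TOOLS: Dict[str, Callable[[str], str]] = {"Calculator": calculator}


def run_agent(question: str, max_steps: int = 5) -> str:
    """Loop until the model produces a final answer or the step limit is hit."""
    history = [f"Question: {question}"]
    for _ in range(max_steps):
        decision = fake_model(history)
        if decision["type"] == "final":
            return decision["output"]
        # Route the call to the chosen tool and record what it returned.
        observation = TOOLS[decision["tool"]](decision["input"])
        history.append(f"Observation: {observation}")
    return "Stopped: step limit reached"


print(run_agent("What is 2 + 3?"))  # The result is 5
```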

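Custom and LangChain tools

LangChain agents use tools (which correspond to OpenAI functions). LangChain (version 0.0.220) ships with a large number of tools out of the box that let you connect to a wide range of paid and free services or interactions, for example: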

- arxiv (free)

- azure_cognitive_services

- bing_search

- brave_search

- ddg_search

- file_management

- gmail

- google_places

- google_search

- google_serper

- graphql

- human interaction

- jira

- json

- metaphor_search

- office365

- openapi

- openweathermap

- playwright

- powerbi

- pubmed

- python

- requests

- scenexplain

- searx_search

- shell

- sleep

- spark_sql

- sql_database

- steamship_image_generation

- vectorstore

- wikipedia (free)

- wolfram_alpha

- youtube

- zapier

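In this blog we show how to create a custom tool that accesses a custom REST API.

Conversational memory

This type of memory comes in handy when you want the agent to remember items from previous inputs. For example: if you ask "Who is Albert Einstein?" and then "Who were his mentors?", the agent can only resolve "his" if it remembers the earlier question.

See LangChain's documentation on Memory.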
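The idea behind a conversation buffer can be sketched in plain Python: keep every message and send the whole history along with each new question. The `BufferMemory` class below is a hypothetical stand-in written for this illustration, not LangChain's ConversationBufferMemory.

```python
from typing import Dict, List


class BufferMemory:
    """Keeps every message of the conversation, oldest first."""

    def __init__(self) -> None:
        self.messages: List[Dict[str, str]] = []

    def add(self, role: str, content: str) -> None:
        self.messages.append({"role": role, "content": content})

    def build_prompt(self, new_question: str) -> List[Dict[str, str]]:
        """Return the stored history followed by the new user message."""
        return self.messages + [{"role": "user", "content": new_question}]


memory = BufferMemory()
memory.add("user", "Who is Albert Einstein?")
memory.add("assistant", "Albert Einstein was a theoretical physicist.")

# The follow-up question is sent together with the earlier exchange,
# so the model can work out who "his" refers to.
prompt = memory.build_prompt("Who were his mentors?")
for message in prompt:
    print(f"{message['role']}: {message['content']}")
```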

Agent with functions, custom tools and memory

Our agent can be found in this Git repository:

https://github.com/gilfernandes/chat_functions.git

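To get it running, install Conda first.

Then create a Conda environment named langchain_streamlit, activate it, and install the following libraries: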

conda activate langchain_streamlit

pip install langchain

pip install prompt_toolkit

pip install wikipedia

pip install arxiv

pip install python-dotenv

pip install streamlit

pip install openai

pip install duckduckgo-search

Then create a .env file with the following content:

OPENAI_API_KEY=<key>

You can then run the command-line version of the agent with:

python .\agent_cli.py

The Streamlit version can be run on port 8080 with this command:

streamlit run ./agent_streamlit.py --server.port 8080

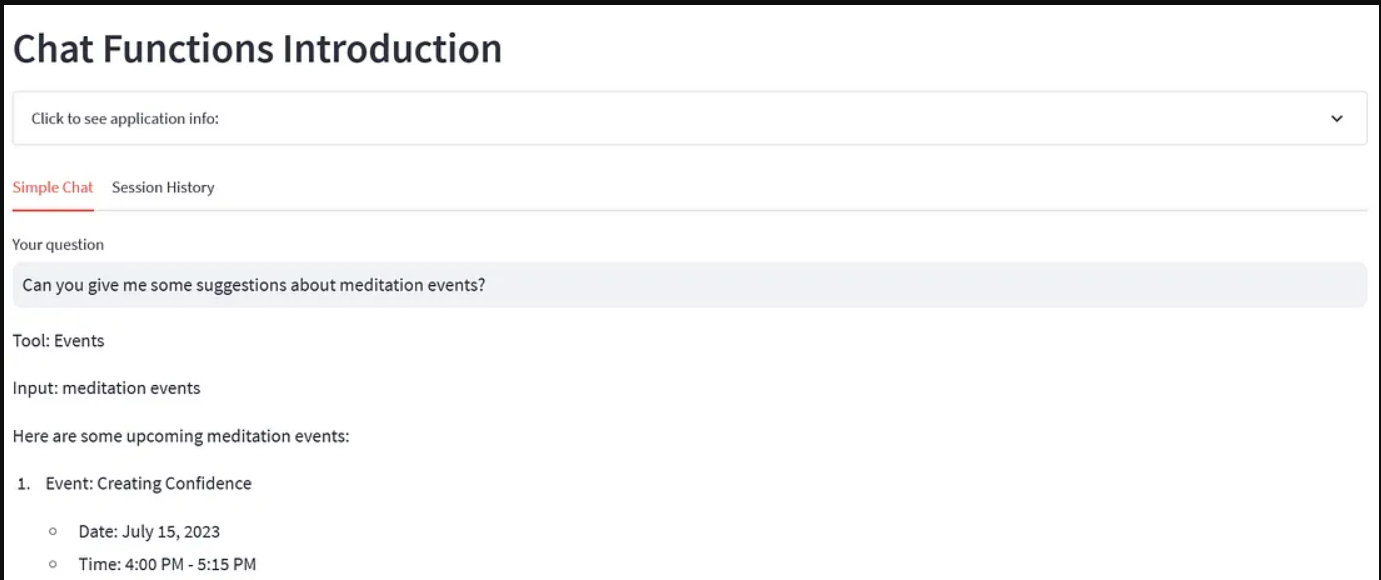

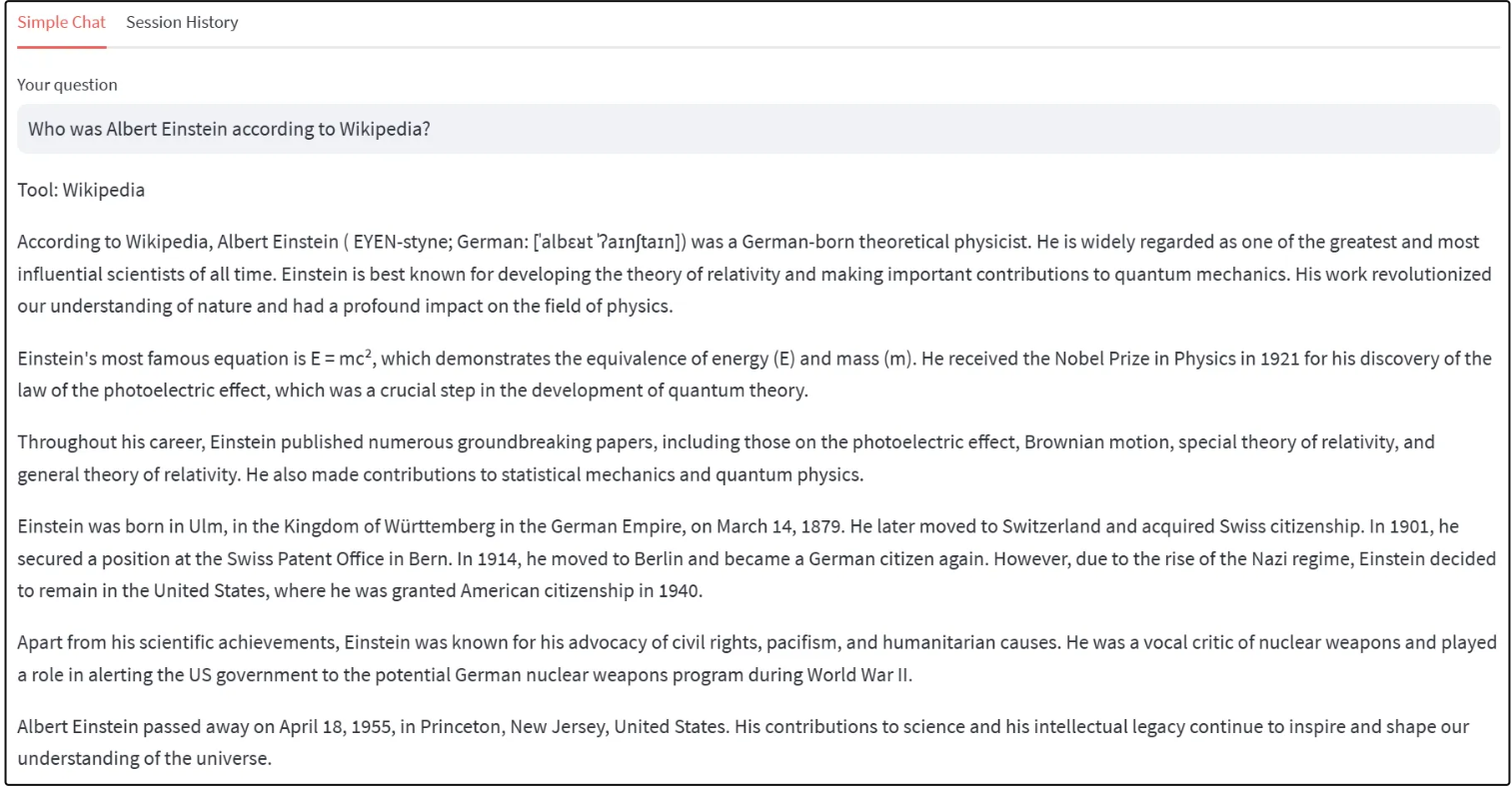

Here are some screenshots of the tool:

The UI shows which tool (OpenAI function) is being used and the input that was sent to it.

Using the calculator

Using Wikipedia

Code details

The code consists of the following main parts:

Agent setup

The following code sets up the agent with its tools and memory:

    # Import paths assumed for LangChain 0.0.220; adjust them to your installed version.
    from typing import Dict, Tuple

    from langchain.agents import AgentExecutor, AgentType, Tool, initialize_agent
    from langchain.chains import LLMMathChain
    from langchain.chat_models import ChatOpenAI
    from langchain.memory import ConversationBufferMemory
    from langchain.prompts import MessagesPlaceholder
    from langchain.tools import StructuredTool
    from langchain.utilities import (
        ArxivAPIWrapper,
        DuckDuckGoSearchAPIWrapper,
        PubMedAPIWrapper,
        WikipediaAPIWrapper,
    )

    import tools_wrappers  # local module containing the custom EventsAPIWrapper


    def setup_agent() -> AgentExecutor:
        """
        Sets up the tools for a function based chain.
        We have here the following tools:
        - wikipedia
        - duckduckgo
        - calculator
        - arxiv
        - events (a custom tool)
        - pubmed
        """
        cfg = Config()
        duckduck_search = DuckDuckGoSearchAPIWrapper()
        wikipedia = WikipediaAPIWrapper()
        pubmed = PubMedAPIWrapper()
        events = tools_wrappers.EventsAPIWrapper()
        events.doc_content_chars_max = 5000
        llm_math_chain = LLMMathChain.from_llm(llm=cfg.llm, verbose=False)
        arxiv = ArxivAPIWrapper()
        tools = [
            Tool(
                name="Search",
                func=duckduck_search.run,
                description=(
                    "useful for when you need to answer questions about "
                    "current events. You should ask targeted questions"
                ),
            ),
            Tool(
                name="Calculator",
                func=llm_math_chain.run,
                description="useful for when you need to answer questions about math",
            ),
            Tool(
                name="Wikipedia",
                func=wikipedia.run,
                description="useful when you need an answer about encyclopedic general knowledge",
            ),
            Tool(
                name="Arxiv",
                func=arxiv.run,
                # The original reused the Wikipedia description here; a distinct
                # description helps the model tell the two tools apart.
                description="useful when you need an answer about scientific papers and preprints",
            ),
            # This is the custom tool. Note that the OpenAI function parameters
            # are inferred by analysing the `events.run` method.
            StructuredTool.from_function(
                func=events.run,
                name="Events",
                description=(
                    "useful when you need an answer about meditation related "
                    "events in the united kingdom"
                ),
            ),
            StructuredTool.from_function(
                func=pubmed.run,
                name="PubMed",
                description="Useful tool for querying medical publications",
            ),
        ]
        agent_kwargs, memory = setup_memory()

        return initialize_agent(
            tools,
            cfg.llm,
            agent=AgentType.OPENAI_FUNCTIONS,
            verbose=False,
            agent_kwargs=agent_kwargs,
            memory=memory,
        )
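The comment on the custom tool says that the function parameters are inferred from the `events.run` method. LangChain does this with its own internal machinery. The standard-library sketch below only illustrates the general idea of deriving a JSON Schema from a Python signature; `schema_from_signature`, the type mapping and the example `run` function are all hypothetical.

```python
import inspect
from typing import Any, Callable, Dict

# Mapping from Python annotations to JSON Schema type names (deliberately tiny).
JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def schema_from_signature(func: Callable[..., Any]) -> Dict[str, Any]:
    """Build a JSON Schema 'parameters' object from a function's signature."""
    properties: Dict[str, Any] = {}
    required = []
    for name, param in inspect.signature(func).parameters.items():
        # Unknown or missing annotations fall back to "string".
        properties[name] = {"type": JSON_TYPES.get(param.annotation, "string")}
        # Parameters without a default value are treated as required.
        if param.default is inspect.Parameter.empty:
            required.append(name)
    return {"type": "object", "properties": properties, "required": required}


def run(query: str, limit: int = 10) -> str:
    """Hypothetical tool function, similar in shape to `events.run`."""
    return f"searching for {query} (limit {limit})"


schema = schema_from_signature(run)
print(schema)
```

A schema of this kind is what ends up in the `parameters` field of a function description sent to the model.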

This function sets up the memory:

    def setup_memory() -> Tuple[Dict, ConversationBufferMemory]:
        """
        Sets up memory for the open ai functions agent.
        :return a tuple with the agent keyword pairs and the conversation memory.
        """
        agent_kwargs = {
            "extra_prompt_messages": [MessagesPlaceholder(variable_name="memory")],
        }
        memory = ConversationBufferMemory(memory_key="memory", return_messages=True)

        return agent_kwargs, memory

This is the model configuration used by the agent:

    class Config:
        """
        Contains the configuration of the LLM.
        """
        model = 'gpt-3.5-turbo-16k-0613'
        llm = ChatOpenAI(temperature=0, model=model)

This is the custom tool, which calls a simple REST API:

    import urllib.parse
    from typing import Dict

    import requests
    from pydantic import BaseModel, Extra


    class EventsAPIWrapper(BaseModel):
        """Wrapper around a custom API used to fetch public event information.

        There is no need to install any package to get this to work.
        """

        offset: int = 0
        limit: int = 10
        filter_by_country: str = "United Kingdom"
        doc_content_chars_max: int = 4000

        class Config:
            """Configuration for this pydantic object."""

            extra = Extra.forbid

        def run(self, query: str) -> str:
            """Run Events search and get page summaries."""
            # URL-encode the free-text values before placing them in the query string.
            encoded_query = urllib.parse.quote_plus(query)
            encoded_filter_by_country = urllib.parse.quote_plus(self.filter_by_country)
            response = requests.get(
                "https://events.brahmakumaris.org/events-rest/event-search-v2"
                f"?search={encoded_query}"
                f"&limit={self.limit}&offset={self.offset}"
                f"&filterByCountry={encoded_filter_by_country}&includeDescription=true"
            )
            if 200 <= response.status_code < 300:
                payload = response.json()
                summaries = [self._formatted_event_summary(e) for e in payload["events"]]
                # Truncate so the tool output stays within the prompt budget.
                return "\n\n".join(summaries)[: self.doc_content_chars_max]
            else:
                return f"Failed to call events API with status code {response.status_code}"

        @staticmethod
        def _formatted_event_summary(event: Dict) -> str:
            return (
                f"Event: {event['name']}\n"
                f"Start: {event['startDate']} {event['startTime']}\n"
                f"End: {event['endDate']} {event['endTime']}\n"
                f"Venue: {event['venueAddress']} {event['postalCode']} "
                f"{event['locality']} {event['countryName']}\n"
                f"Event Description: {event['description']}\n"
                f"Event URL: https://brahmakumaris.uk/event/?id={event['id']}\n"
            )
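The wrapper URL-encodes the query and the country filter before building the request URL. The sketch below isolates that step so it can be checked without calling the API. The helper name `build_events_url` is hypothetical; the base URL and query parameters are taken from the wrapper above.

```python
from urllib.parse import quote_plus

# Base URL as used by the wrapper above.
BASE_URL = "https://events.brahmakumaris.org/events-rest/event-search-v2"


def build_events_url(query: str, offset: int = 0, limit: int = 10,
                     country: str = "United Kingdom") -> str:
    """Assemble the search URL with URL-encoded query-string values."""
    return (
        f"{BASE_URL}?search={quote_plus(query)}"
        f"&limit={limit}&offset={offset}"
        f"&filterByCountry={quote_plus(country)}&includeDescription=true"
    )


# Spaces become '+' and '&' becomes '%26', so the query cannot break the URL.
print(build_events_url("meditation & yoga"))
```

Without the encoding, an ampersand in the user's query would be read as the start of a new query parameter.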

Agent CLI loop

The agent CLI uses a simple while loop:

    from prompt_toolkit import HTML, PromptSession
    from prompt_toolkit.history import FileHistory
    from langchain.input import get_colored_text
    from dotenv import load_dotenv
    from langchain.agents import AgentExecutor
    import langchain

    from callbacks import AgentCallbackHandler

    # Load the .env file (OPENAI_API_KEY) before importing the agent setup code.
    load_dotenv()

    from chain_setup import setup_agent

    langchain.debug = True

    if __name__ == "__main__":
        agent_executor: AgentExecutor = setup_agent()
        # Keep the question history in a file so it survives restarts.
        session = PromptSession(history=FileHistory(".agent-history-file"))
        while True:
            question = session.prompt(
                HTML("<b>Type <u>Your question</u></b> ('q' to exit): ")
            )
            if question.lower() == 'q':
                break
            if len(question) == 0:
                continue
            try:
                print(get_colored_text("Response: >>> ", "green"))
                print(get_colored_text(
                    agent_executor.run(question, callbacks=[AgentCallbackHandler()]),
                    "green",
                ))
            except Exception as e:
                print(get_colored_text(f"Failed to process {question}", "red"))
                print(get_colored_text(f"Error {e}", "red"))

Streamlit application

This is the Streamlit application that renders the UI shown above:

    import streamlit as st
    from dotenv import load_dotenv
    from langchain.agents import AgentExecutor

    import callbacks

    # Load the .env file (OPENAI_API_KEY) before importing the agent setup code.
    load_dotenv()

    from chain_setup import setup_agent

    QUESTION_HISTORY: str = 'question_history'


    def init_stream_lit():
        title = "Chat Functions Introduction"
        st.set_page_config(page_title=title, layout="wide")
        agent_executor: AgentExecutor = prepare_agent()
        st.header(title)
        if QUESTION_HISTORY not in st.session_state:
            st.session_state[QUESTION_HISTORY] = []
        intro_text()
        simple_chat_tab, historical_tab = st.tabs(["Simple Chat", "Session History"])
        with simple_chat_tab:
            user_question = st.text_input("Your question")
            with st.spinner('Please wait ...'):
                try:
                    response = agent_executor.run(
                        user_question,
                        callbacks=[callbacks.StreamlitCallbackHandler(st)],
                    )
                    st.write(f"{response}")
                    st.session_state[QUESTION_HISTORY].append((user_question, response))
                except Exception as e:
                    st.error(f"Error occurred: {e}")
        with historical_tab:
            # Show all non-empty questions asked in this session with their answers.
            for q in st.session_state[QUESTION_HISTORY]:
                question = q[0]
                if len(question) > 0:
                    st.write(f"Q: {question}")
                    st.write(f"A: {q[1]}")


    def intro_text():
        with st.expander("Click to see application info:"):
            st.write("""Ask questions about:
    - [Wikipedia](https://www.wikipedia.org/) Content
    - Scientific publications ([pubmed](https://pubmed.ncbi.nlm.nih.gov) and [arxiv](https://arxiv.org))
    - Mathematical calculations
    - Search engine content ([DuckDuckGo](https://duckduckgo.com/))
    - Meditation related events (Custom Tool)
    """)


    # Cache the agent so it is created once and reused across Streamlit reruns.
    @st.cache_resource()
    def prepare_agent() -> AgentExecutor:
        return setup_agent()


    if __name__ == "__main__":
        init_stream_lit()

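Summary

OpenAI functions can now be used easily with LangChain. Compared to the prompt-based approach, this appears to be a better way to create agents (faster and more accurate).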
