Help bring everyone into the conversation using speech to text with Azure AI Speech.

This article describes the architecture used in this sample on GitHub. The code from this reference architecture is an example for guidance only, and you may need to optimize it before using it in a production environment.

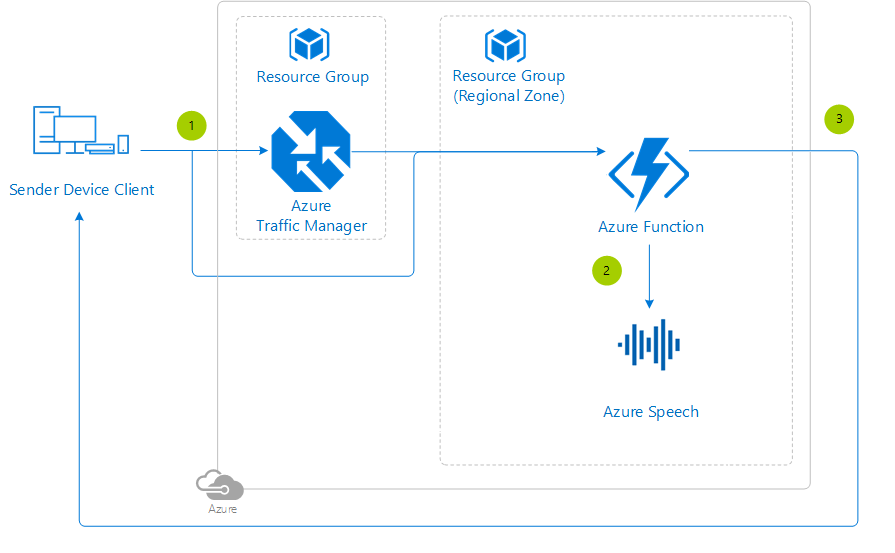

Architecture Diagram

Architecture Services

- Azure Functions - The simplest way to run code on demand in the cloud.

- Azure AI Speech - The service that provides the speech to text functionality.

Architecture Considerations

When enabling this functionality in your game, keep in mind the following variables:

- Languages supported - For a complete list of languages, see the language support topic.

- Regions supported - For information about regional availability, see the regions topic.

- Audio inputs - The Speech service supports 16-bit, 16 kHz mono PCM audio by default.
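As a minimal sketch of the audio input consideration above, the following Python snippet checks whether a WAV clip matches the default 16-bit, 16 kHz mono PCM format before it is sent anywhere. It uses only the standard library `wave` module. The function names `is_default_speech_format` and `make_silent_wav` are hypothetical helpers introduced for illustration and are not part of the sample project.

```python
import io
import wave


def is_default_speech_format(wav_bytes: bytes) -> bool:
    """Return True if the WAV data is 16-bit, 16 kHz, mono, uncompressed PCM."""
    with wave.open(io.BytesIO(wav_bytes), "rb") as wav:
        return (
            wav.getsampwidth() == 2            # 2 bytes per sample = 16-bit
            and wav.getframerate() == 16000    # 16 kHz sample rate
            and wav.getnchannels() == 1        # mono
            and wav.getcomptype() == "NONE"    # uncompressed PCM
        )


def make_silent_wav(sample_rate: int = 16000, channels: int = 1,
                    seconds: float = 0.1) -> bytes:
    """Build a short silent 16-bit WAV clip in memory (hypothetical test helper)."""
    buffer = io.BytesIO()
    with wave.open(buffer, "wb") as wav:
        wav.setnchannels(channels)
        wav.setsampwidth(2)
        wav.setframerate(sample_rate)
        frame_count = int(sample_rate * seconds)
        wav.writeframes(b"\x00\x00" * frame_count * channels)
    return buffer.getvalue()
```

A game client could run a check like this on recorded audio and resample or downmix when it fails, rather than sending audio in a format the service is not expecting.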

Deployment Template

Click the following button to deploy the project to your Azure subscription:

This operation triggers a deployment of the azuredeploy.json ARM template file to your Azure subscription, which creates the necessary Azure resources. This may incur charges in your Azure account.

Note

If you're interested in how the ARM template works, review the Azure Resource Manager template documentation from each of the different services leveraged in this reference architecture:

There are two types of Azure AI services subscriptions. The first is a subscription to a single service, such as Azure AI Vision or Azure AI Speech. A single-service subscription is restricted to just that service. The second type is a multi-service subscription, which lets you use a single subscription for multiple Azure AI services and also consolidates billing. To make this reference architecture as modular as possible, each Azure AI service is set up as a single service.

Finally, add this Function application setting so the sample project can connect to the Azure services:

- SPEECH_KEY - The access key for the Azure AI Speech resource that was created.

Tip

To run the Azure Functions locally, update the local.settings.json file with this same app setting.
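Azure Functions exposes application settings to the running code as environment variables, and the values in local.settings.json are loaded the same way when running locally. As a minimal sketch, assuming a Python function (the sample project may use a different language), the `SPEECH_KEY` setting could be read as follows. The helper name `get_speech_key` is hypothetical.

```python
import os


def get_speech_key(environ=os.environ) -> str:
    """Read the SPEECH_KEY app setting, failing fast if it is missing.

    `environ` is injectable so the lookup can be tested without touching
    the real process environment.
    """
    key = environ.get("SPEECH_KEY", "").strip()
    if not key:
        raise RuntimeError(
            "SPEECH_KEY is not set. Add it to the Function app settings, "
            "or to local.settings.json when running locally."
        )
    return key
```

Failing at startup with a clear message is usually easier to diagnose than an authentication error returned later by the Speech service.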

Step by Step

- The player's device sends a request to the Azure Function with the language specified in the header and the speech audio in the body.

- The Azure Function then uses that information to submit a speech to text request to the Azure AI Speech service. The service's response body contains the text transcribed from the audio.

- The Azure Function then responds to the player's request with the transcribed text.
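The steps above can be sketched in Python using the Speech service's REST API for short audio. This is an illustration under stated assumptions, not the sample project's actual implementation, which may use the Speech SDK instead. The function names are hypothetical, the `region` parameter is an assumption (the article only names the `SPEECH_KEY` setting), and the language is passed in as an argument because the article does not name the request header that carries it.

```python
import json
import urllib.parse
import urllib.request


def build_recognition_request(audio: bytes, language: str,
                              region: str, key: str) -> urllib.request.Request:
    """Build the POST request that submits WAV audio for recognition."""
    query = urllib.parse.urlencode({"language": language, "format": "simple"})
    url = (
        f"https://{region}.stt.speech.microsoft.com"
        f"/speech/recognition/conversation/cognitiveservices/v1?{query}"
    )
    headers = {
        "Ocp-Apim-Subscription-Key": key,
        # Matches the default 16-bit, 16 kHz mono PCM input format.
        "Content-Type": "audio/wav; codecs=audio/pcm; samplerate=16000",
        "Accept": "application/json",
    }
    return urllib.request.Request(url, data=audio, headers=headers, method="POST")


def extract_text(response_body: bytes) -> str:
    """Return the recognized text, or an empty string if recognition failed."""
    result = json.loads(response_body)
    if result.get("RecognitionStatus") != "Success":
        return ""
    return result.get("DisplayText", "")


def speech_to_text(audio: bytes, language: str, region: str, key: str) -> str:
    """Send the audio to the Speech service and return the recognized text."""
    request = build_recognition_request(audio, language, region, key)
    with urllib.request.urlopen(request, timeout=30) as response:
        return extract_text(response.read())
```

Inside the Azure Function, the handler would read the language from the incoming request header and the audio from the request body, call something like `speech_to_text`, and return the resulting string to the player's device.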

Azure AI Speech samples

You can find speech to text samples at the Azure AI Speech SDK GitHub repository.

Security Considerations

Do not hard-code any Azure AI services keys or connection strings in the source of the Function. At a minimum, store them in the Function App Settings; for stronger security, use Key Vault. There is a tutorial explaining how to create a Key Vault, how to use a managed service identity with a Function, and how to read a secret stored in Key Vault from a Function.

Pricing

If you don't have an Azure subscription, create a free account to get started with 12 months of free services. You're not charged for services included for free with an Azure free account unless you exceed the limits of these services. Learn how to check usage through the Azure portal or through the usage file.

You are responsible for the cost of the Azure services used while running these reference architectures. The total amount will vary based on usage. See the pricing webpages for each of the services that were used in the reference architecture:

You can also use the Azure pricing calculator to configure and estimate the costs for the Azure services that you are planning to use.
