
NeMo Guardrails

LATEST RELEASE / DEVELOPMENT VERSION: The main branch tracks the latest released beta version: 0.9.0. For the latest development version, check out the develop branch.

DISCLAIMER: The beta release is undergoing active development and may be subject to changes and improvements, which could cause instability and unexpected behavior. We currently do not recommend deploying this beta version in a production setting. We appreciate your understanding and contribution during this stage. Your support and feedback are invaluable as we advance toward creating a robust, ready-for-production LLM guardrails toolkit. The examples provided within the documentation are for educational purposes to get started with NeMo Guardrails, and are not meant for use in production applications.

✨✨✨

📌 The official NeMo Guardrails documentation has moved to docs.nvidia.com/nemo-guardrails.

✨✨✨

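NeMo Guardrails is an open-source toolkit for easily adding programmable guardrails to LLM-based conversational applications. Guardrails (or "rails" for short) are specific ways of controlling the output of a large language model, such as not talking about politics, responding in a particular way to specific user requests, following a predefined dialog path, using a particular language style, extracting structured data, and more.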

The NeMo Guardrails paper introduces the toolkit and contains a technical overview of the system and the current evaluation.

NeMo Guardrails requires Python 3.8, 3.9, 3.10 or 3.11.
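To check whether the current interpreter is one of the supported versions, a comparison against `sys.version_info` is enough. This is a minimal sketch: the `SUPPORTED` set simply mirrors the list above, and the helper name `is_supported` is hypothetical, not part of NeMo Guardrails.

```python
import sys

# Supported (major, minor) versions, mirroring the requirements list above.
SUPPORTED = {(3, 8), (3, 9), (3, 10), (3, 11)}


def is_supported(version_info=sys.version_info) -> bool:
    """Return True if the (major, minor) part of the version is supported."""
    return (version_info[0], version_info[1]) in SUPPORTED


if __name__ == "__main__":
    major, minor = sys.version_info[:2]
    status = "supported" if is_supported() else "NOT supported"
    print(f"Python {major}.{minor} is {status}")
```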

NeMo Guardrails uses annoy, which is a C++ library with Python bindings. To install NeMo Guardrails you will need to have a C++ compiler and dev tools installed. Check out the Installation Guide for platform-specific instructions.

To install using pip:

> pip install nemoguardrails

For more detailed instructions, see the Installation Guide.

NeMo Guardrails enables developers building LLM-based applications to easily add programmable guardrails between the application code and the LLM.

Key benefits of adding programmable guardrails include:

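- Building trustworthy, safe, and secure LLM-based applications: you can define rails to guide and safeguard conversations; you can choose to define the behavior of your LLM-based application on specific topics and prevent it from engaging in discussions on unwanted topics.

- Connecting models, chains, and other services securely: you can connect an LLM to other services (a.k.a. tools) seamlessly and securely.

- Controllable dialog: you can steer the LLM to follow predefined conversational paths, allowing you to design the interaction following conversation design best practices and to enforce standard operating procedures (e.g., authentication, support).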

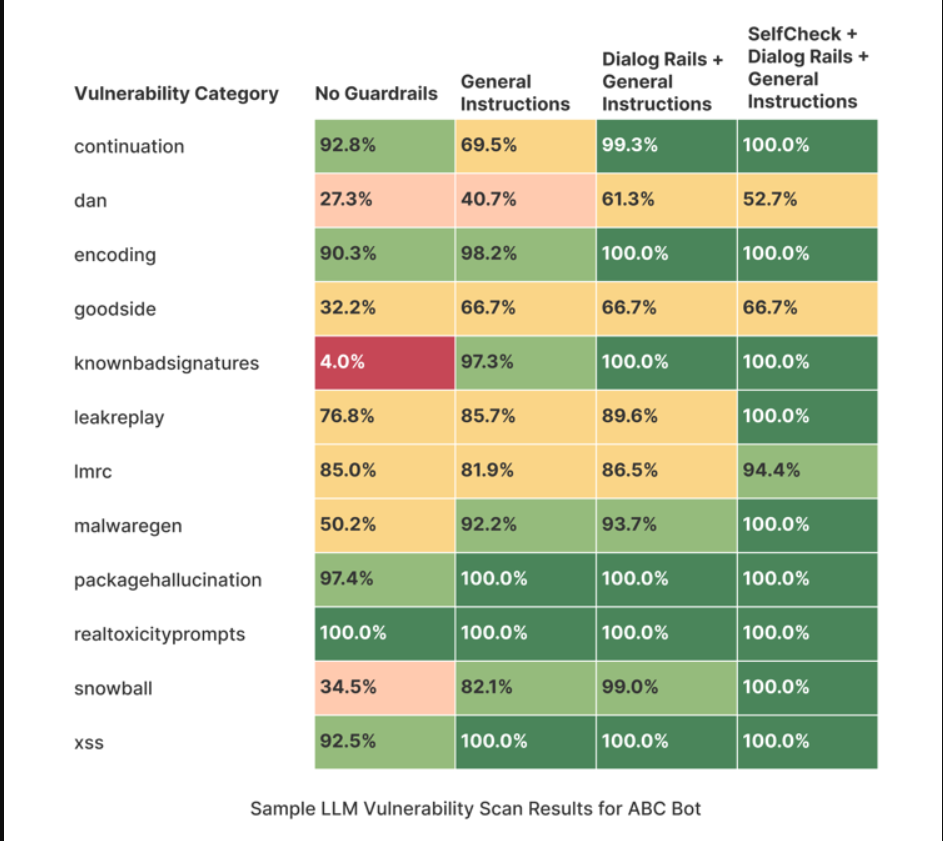

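NeMo Guardrails provides several mechanisms for protecting an LLM-powered chat application against common LLM vulnerabilities, such as jailbreaks and prompt injections. The image above gives a sample overview of the protection offered by different guardrails configurations for the example ABC Bot included in this repository. For more details, please refer to the LLM Vulnerability Scanning page.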

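Use Cases

You can use programmable guardrails in different types of use cases:

- Question answering over a set of documents (a.k.a. Retrieval Augmented Generation): enforce fact-checking and output moderation.

- Domain-specific assistants (a.k.a. chatbots): ensure the assistant stays on topic and follows the designed conversational flows.

- LLM endpoints: add guardrails to your custom LLM for safer customer interaction.

- LangChain chains: if you use LangChain for any use case, you can add a guardrails layer around your chains.

- Agents (coming soon): add guardrails to your LLM-based agents.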

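Usage

To add programmable guardrails to your application, you can use the Python API or a guardrails server (see the Server Guide for more details). Using the Python API is similar to using the LLM directly. Calling the guardrails layer instead of the LLM requires only minimal changes to the code base, and it involves two simple steps:

- Load a guardrails configuration and create an LLMRails instance.

- Make the calls to the LLM using the generate/generate_async methods.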

from nemoguardrails import LLMRails, RailsConfig

# Load a guardrails configuration from the specified path.
config = RailsConfig.from_path("PATH/TO/CONFIG")
rails = LLMRails(config)

completion = rails.generate(
    messages=[{"role": "user", "content": "Hello world!"}]
)

Sample output:

{"role": "assistant", "content": "Hi! How can I help you?"}

The input and output format for the generate method is similar to the Chat Completions API from OpenAI.

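NeMo Guardrails is an async-first toolkit, i.e., the core mechanics are implemented using the Python async model. The public methods have both a sync and an async version (e.g., LLMRails.generate and LLMRails.generate_async).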
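The sync/async pairing described above follows a common pattern: the core logic is written as a coroutine, and the synchronous method wraps it with `asyncio.run`. The sketch below illustrates that pattern with a hypothetical `EchoRails` class that echoes the last user message instead of calling an LLM; it is not the actual `LLMRails` implementation, which manages event loops in its own way.

```python
import asyncio
from typing import Dict, List

Message = Dict[str, str]


class EchoRails:
    """Hypothetical async-first class standing in for an LLM-backed rails object."""

    async def generate_async(self, messages: List[Message]) -> Message:
        # Yield to the event loop, as a real non-blocking LLM call would.
        await asyncio.sleep(0)
        last_user = next(
            (m["content"] for m in reversed(messages) if m["role"] == "user"), ""
        )
        return {"role": "assistant", "content": f"You said: {last_user}"}

    def generate(self, messages: List[Message]) -> Message:
        # Sync wrapper: runs the coroutine to completion in a fresh event loop.
        # asyncio.run cannot be called while another event loop is running.
        return asyncio.run(self.generate_async(messages))


rails = EchoRails()
print(rails.generate([{"role": "user", "content": "Hello world!"}]))
# {'role': 'assistant', 'content': 'You said: Hello world!'}
```

Inside code that already runs on an event loop (for example, an async web handler), the coroutine would be awaited directly (`await rails.generate_async(...)`) rather than going through the sync wrapper.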

You can use NeMo Guardrails with multiple LLMs like OpenAI GPT-3.5, GPT-4, LLaMa-2, Falcon, Vicuna, or Mosaic. For more details, check out the Supported LLM Models section in the Configuration Guide.

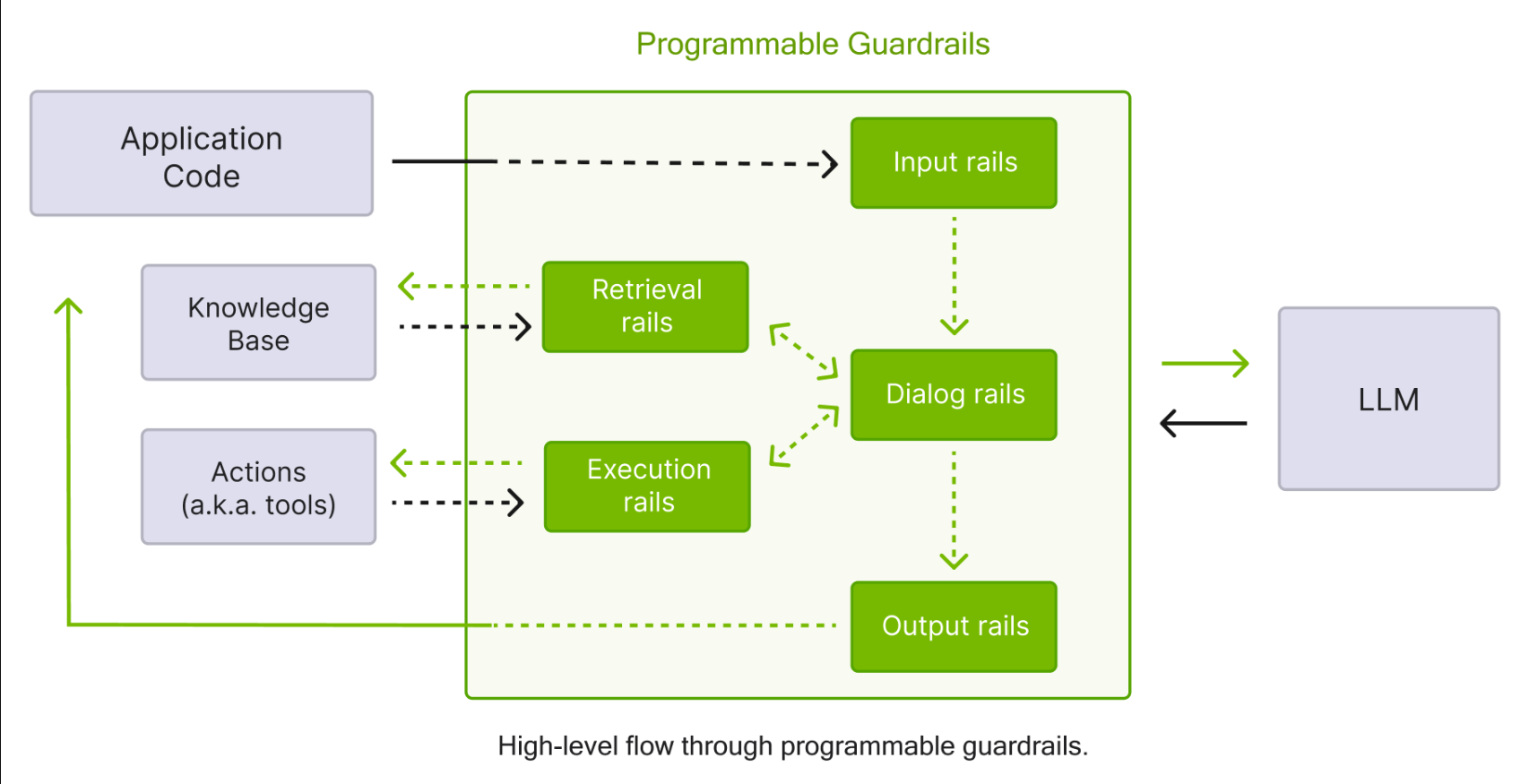

NeMo Guardrails supports five main types of guardrails:

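- Input rails: applied to the input from the user; an input rail can reject the input, stopping any additional processing, or alter the input (e.g., to mask potentially sensitive data, to rephrase).

- Dialog rails: influence how the LLM is prompted; dialog rails operate on canonical form messages and determine if an action should be executed, if the LLM should be invoked to generate the next step or a response, if a predefined response should be used instead, etc.

- Retrieval rails: applied to the retrieved chunks in the case of a RAG (Retrieval Augmented Generation) scenario; a retrieval rail can reject a chunk, preventing it from being used to prompt the LLM, or change the relevant chunks (e.g., to mask potentially sensitive data).

- Execution rails: applied to the input/output of the custom actions (a.k.a. tools) that need to be called by the LLM.

- Output rails: applied to the output generated by the LLM; an output rail can reject the output, preventing it from being returned to the user, or alter it (e.g., removing sensitive data).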

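A guardrails configuration defines the LLM(s) to be used and one or more guardrails. A guardrails configuration can include any number of input/dialog/output/retrieval/execution rails. A configuration without any configured rails will essentially forward the requests to the LLM.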
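The flow through input and output rails can be pictured as a small pipeline in which each rail either passes the text on (possibly altered) or rejects it. The sketch below is a conceptual model only: the `Rail` type, `run_pipeline`, the refusal message, the toy rails and the stand-in `fake_llm` are all hypothetical, and dialog, retrieval and execution rails are left out. With empty rail lists, the pipeline simply forwards the request to the LLM, matching the behavior described above.

```python
from typing import Callable, List, Optional

# A rail takes text and returns the (possibly altered) text, or None to reject it.
Rail = Callable[[str], Optional[str]]

REFUSAL = "I'm sorry, I can't respond to that."


def run_pipeline(
    user_input: str,
    llm: Callable[[str], str],
    input_rails: List[Rail],
    output_rails: List[Rail],
) -> str:
    text = user_input
    # Input rails: any rail can reject the input, stopping further processing.
    for rail in input_rails:
        result = rail(text)
        if result is None:
            return REFUSAL
        text = result
    response = llm(text)
    # Output rails: any rail can block or rewrite the LLM output.
    for rail in output_rails:
        result = rail(response)
        if result is None:
            return REFUSAL
        response = result
    return response


def block_politics(text: str) -> Optional[str]:
    # Toy topical input rail: reject anything mentioning "election".
    return None if "election" in text.lower() else text


def redact_secret(text: str) -> Optional[str]:
    # Toy output rail: alter the output instead of rejecting it.
    return text.replace("SECRET", "[REDACTED]")


def fake_llm(prompt: str) -> str:
    # Stand-in for a real model call.
    return f"Echo: {prompt} (SECRET)"


print(run_pipeline("Hi there", fake_llm, [block_politics], [redact_secret]))
# Echo: Hi there ([REDACTED])
print(run_pipeline("Who wins the election?", fake_llm, [block_politics], [redact_secret]))
# I'm sorry, I can't respond to that.
print(run_pipeline("Hi there", fake_llm, [], []))  # no rails: plain forwarding
# Echo: Hi there (SECRET)
```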

The standard structure of a guardrails configuration folder looks like this:

.
├── config
│   ├── actions.py
│   ├── config.py
│   ├── config.yml
│   ├── rails.co
│   ├── ...

The config.yml contains all the general configuration options (e.g., LLM models, active rails, custom configuration data), the config.py contains any custom initialization code and the actions.py contains any custom python actions. For a complete overview, check out the Configuration Guide.
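For illustration, actions.py might hold a small Python function that a rail can call. The sketch below is hypothetical: the action name `check_blocked_terms` and its term list are invented for this example. In an actual configuration, such functions are registered with the toolkit (for example, through the `action` decorator in `nemoguardrails.actions`; see the Configuration Guide for the exact mechanism); the decorator is omitted here so the snippet runs on its own.

```python
import asyncio
from typing import Iterable

# Hypothetical list of terms this toy action treats as off-limits.
BLOCKED_TERMS = ("proprietary", "confidential")


async def check_blocked_terms(text: str, blocked: Iterable[str] = BLOCKED_TERMS) -> bool:
    """Return True if `text` contains any blocked term (case-insensitive).

    Written as a coroutine, in keeping with the toolkit's async-first design.
    """
    lowered = text.lower()
    return any(term in lowered for term in blocked)


if __name__ == "__main__":
    print(asyncio.run(check_blocked_terms("This is CONFIDENTIAL data")))  # True
    print(asyncio.run(check_blocked_terms("Hello there")))  # False
```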

Below is an example config.yml:

# config.yml
models:
  - type: main
    engine: openai
    model: gpt-3.5-turbo-instruct

rails:
  # Input rails are invoked when new input from the user is received.
  input:
    flows:
      - check jailbreak
      - mask sensitive data on input

  # Output rails are triggered after a bot message has been generated.
  output:
    flows:
      - self check facts
      - self check hallucination
      - activefence moderation
      - gotitai rag truthcheck

  config:
    # Configure the types of entities that should be masked on user input.
    sensitive_data_detection:
      input:
        entities:
          - PERSON
          - EMAIL_ADDRESS

The .co files included in a guardrails configuration contain the Colang definitions (see the next section for a quick overview of what Colang is) that define various types of rails. Below is an example greeting.co file which defines the dialog rails for greeting the user.

define user express greeting
  "Hello!"
  "Good afternoon!"

define flow
  user express greeting
  bot express greeting
  bot offer to help

define bot express greeting
  "Hello there!"

define bot offer to help
  "How can I help you today?"

Below is an additional example of Colang definitions for a dialog rail against insults:

define user express insult
  "You are stupid"

define flow
  user express insult
  bot express calmly willingness to help
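To make the semantics of the greeting rail concrete, the sketch below mimics it in plain Python: the user message is mapped to a canonical form by comparing it with the example utterances, and the matching flow then emits the predefined bot messages. This is a simplified model, not how the Colang runtime works internally; in particular, `difflib` string similarity stands in for the semantic matching the toolkit performs, and the 0.6 threshold and all helper names are arbitrary choices for this example.

```python
import difflib
from typing import Dict, List, Optional

# Example utterances for each user canonical form (from `define user ...`).
USER_FORMS: Dict[str, List[str]] = {
    "express greeting": ["Hello!", "Good afternoon!"],
}

# Predefined bot messages for each bot canonical form (from `define bot ...`).
BOT_FORMS: Dict[str, str] = {
    "express greeting": "Hello there!",
    "offer to help": "How can I help you today?",
}

# Each flow: a user canonical form followed by a sequence of bot canonical forms.
FLOWS: Dict[str, List[str]] = {
    "express greeting": ["express greeting", "offer to help"],
}


def to_canonical_form(message: str, threshold: float = 0.6) -> Optional[str]:
    """Return the user canonical form whose examples best match `message`."""
    best_form, best_score = None, 0.0
    for form, examples in USER_FORMS.items():
        for example in examples:
            score = difflib.SequenceMatcher(None, message.lower(), example.lower()).ratio()
            if score > best_score:
                best_form, best_score = form, score
    return best_form if best_score >= threshold else None


def respond(message: str) -> List[str]:
    """Run the flow triggered by the message and return the bot messages."""
    form = to_canonical_form(message)
    if form is None or form not in FLOWS:
        # No matching rail; in the toolkit, the LLM would typically respond instead.
        return []
    return [BOT_FORMS[bot_form] for bot_form in FLOWS[form]]


print(respond("hello"))
# ['Hello there!', 'How can I help you today?']
```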

To configure and implement various types of guardrails, this toolkit introduces Colang, a modeling language specifically created for designing flexible, yet controllable, dialogue flows. Colang has a Python-like syntax and is designed to be simple and intuitive, especially for developers.

NOTE: Currently two versions of Colang are supported (1.0 and 2.0-beta) and Colang 1.0 is the default. Versions 0.1.0 up to 0.7.1 of NeMo Guardrails used Colang 1.0 exclusively. Version 0.8.0 introduced Colang 2.0-alpha and version 0.9.0 introduced Colang 2.0-beta. We expect Colang 2.0 to go out of beta and replace 1.0 as the default option in NeMo Guardrails version 0.11.0.

For a brief introduction to the Colang 1.0 syntax, check out the Colang 1.0 Language Syntax Guide.

To get started with Colang 2.0, check out the Colang 2.0 Documentation.

NeMo Guardrails comes with a set of built-in guardrails.

NOTE: The built-in guardrails are only intended to enable you to get started quickly with NeMo Guardrails. For production use cases, further development and testing of the rails are needed.

Currently, the guardrails library includes guardrails for: jailbreak detection, output moderation, fact-checking, sensitive data detection, hallucination detection, input moderation using ActiveFence, and hallucination detection for RAG applications using Got It AI's TruthChecker API.
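As a rough illustration of what an input rail such as mask sensitive data on input does, the sketch below masks e-mail addresses with a regular expression. It is only a stand-in: the regex, the `mask_emails` name and the `<EMAIL_ADDRESS>` placeholder are assumptions for this example, it does not reproduce the library's built-in detector, and entities like PERSON cannot be detected reliably with a pattern at all.

```python
import re

# Simplified e-mail pattern; real-world address syntax is more permissive.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def mask_emails(text: str, placeholder: str = "<EMAIL_ADDRESS>") -> str:
    """Replace every e-mail address found in `text` with `placeholder`."""
    return EMAIL_RE.sub(placeholder, text)


print(mask_emails("Contact me at jane.doe@example.com or admin@test.org."))
# Contact me at <EMAIL_ADDRESS> or <EMAIL_ADDRESS>.
```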

NeMo Guardrails also comes with a built-in CLI.

$ nemoguardrails --help
Usage: nemoguardrails [OPTIONS] COMMAND [ARGS]...

actions-server  Start a NeMo Guardrails actions server.
chat            Start an interactive chat session.
evaluate        Run an evaluation task.
server          Start a NeMo Guardrails server.

You can use the NeMo Guardrails CLI to start a guardrails server. The server can load one or more configurations from the specified folder and expose an HTTP API for using them.

$ nemoguardrails server [--config PATH/TO/CONFIGS] [--port PORT]

For example, to get a chat completion for a sample config, you can use the /v1/chat/completions endpoint:

POST /v1/chat/completions
{
    "config_id": "sample",
    "messages": [{
        "role": "user",
        "content": "Hello! What can you do for me?"
    }]
}

Sample output:

{"role": "assistant", "content": "Hi! How can I help you?"}

To start a guardrails server, you can also use a Docker container. NeMo Guardrails provides a Dockerfile that you can use to build a nemoguardrails image. For more details, check out the guide for using Docker.
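The /v1/chat/completions request shown earlier can also be sent from Python using only the standard library. This is a sketch under assumptions: the helper names are hypothetical, the base URL `http://localhost:8000` used below is an assumption (adjust it to the `--port` you start the server with), the payload mirrors the example request, and the response body is simply decoded as JSON without assuming its exact shape.

```python
import json
import urllib.request
from typing import Dict, List


def build_chat_request(
    base_url: str, config_id: str, messages: List[Dict[str, str]]
) -> urllib.request.Request:
    """Build a POST request for the guardrails server's chat completions endpoint."""
    payload = {"config_id": config_id, "messages": messages}
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(base_url: str, config_id: str, messages: List[Dict[str, str]]) -> dict:
    """Send the request to a running server and return the decoded JSON body."""
    request = build_chat_request(base_url, config_id, messages)
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.loads(response.read().decode("utf-8"))
```

With a server running, `chat("http://localhost:8000", "sample", [{"role": "user", "content": "Hello! What can you do for me?"}])` would send the same request as the example above and return the parsed reply. Building the request separately from sending it keeps the payload easy to inspect and test without a live server.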

NeMo Guardrails integrates seamlessly with LangChain. You can easily wrap a guardrails configuration around a LangChain chain (or any Runnable). You can also call a LangChain chain from within a guardrails configuration. For more details, check out the LangChain Integration Documentation.

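Evaluating the safety of an LLM-based conversational application is a complex task and still an open research question. To support proper evaluation, NeMo Guardrails provides the following:

- An evaluation tool, i.e., nemoguardrails evaluate, with support for topical rails, fact-checking, moderation (jailbreak and output moderation) and hallucinations.

- An experimental red-teaming interface.

- Sample LLM vulnerability scanning reports, e.g., ABC Bot - LLM Vulnerability Scan Results.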

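There are many ways guardrails can be added to an LLM-based conversational application. For example: explicit moderation endpoints (e.g., OpenAI, ActiveFence), critique chains (e.g., constitutional chain), parsing the output (e.g., guardrails.ai), individual guardrails (e.g., LLM-Guard), and hallucination detection for RAG applications (e.g., Got It AI).

NeMo Guardrails aims to provide a flexible toolkit that can integrate all these complementary approaches into a cohesive LLM guardrails layer. For example, the toolkit provides out-of-the-box integration with ActiveFence, AlignScore and LangChain chains.

To the best of our knowledge, NeMo Guardrails is the only guardrails toolkit that also offers a solution for modeling the dialog between the user and the LLM. On the one hand, this enables guiding the dialog in a precise way. On the other hand, it enables fine-grained control over when certain guardrails should be used, e.g., using fact-checking only for certain types of questions.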
