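AI agents continue to gain momentum as businesses use generative AI to reinvent customer experiences and automate complex workflows. We are seeing Amazon Bedrock Agents applied in investment research, insurance claims processing, root cause analysis, advertising campaigns, and much more. Agents use the reasoning capability of foundation models (FMs) to break down user-requested tasks into multiple steps. They use developer-provided instructions to create an orchestration plan, and they carry out that plan by securely invoking company APIs and accessing knowledge bases through Retrieval Augmented Generation (RAG) to handle the user’s request accurately.

Although organizations see the benefit of agents that are defined, configured, and tested as managed resources, we increasingly see the need for an additional, more dynamic way to invoke agents. Organizations need solutions that adjust on the fly, whether to test new approaches, respond to changing business rules, or customize solutions for different clients. This is where the new inline agents capability in Amazon Bedrock Agents becomes transformative. It lets you adjust your agent’s behavior at runtime by changing its instructions, tools, guardrails, knowledge bases, prompts, and even the FM it uses, all without redeploying your application.

In this post, we explore how to build an application using Amazon Bedrock inline agents, demonstrating how a single AI assistant can adapt its capabilities dynamically based on user roles.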

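Inline agents in Amazon Bedrock Agents

The runtime flexibility enabled by inline agents opens powerful new possibilities, such as:

- Rapid prototyping – Inline agents minimize the time-consuming create/update/prepare cycles traditionally required for agent configuration changes. Developers can immediately test different combinations of models, tools, and knowledge bases, which greatly accelerates development.

- A/B testing and experimentation – Data science teams can systematically evaluate different model-tool combinations, measure performance metrics, and analyze response patterns in controlled environments. This empirical approach enables quantitative comparison of configurations before production deployment.

- Subscription-based personalization – Software companies can adapt features based on each customer’s subscription level, providing more advanced tools for premium users.

- Persona-based data source integration – Institutions can adjust content complexity and tone based on the user’s profile, providing persona-appropriate explanations and resources by dynamically changing the knowledge bases associated with the agent.

- Dynamic tool selection – Developers can create applications with hundreds of APIs and carry out tasks quickly and accurately by dynamically choosing a small subset of APIs for the agent to consider for a given request. This is particularly helpful for large software as a service (SaaS) platforms that need multi-tenant scaling.

Inline agents expand your options for building and deploying agentic solutions with Amazon Bedrock Agents. For workloads that need managed and versioned agent resources with a predetermined and tested configuration (specific model, instructions, tools, and so on), developers can continue to use InvokeAgent on resources created with CreateAgent. For workloads that need to change runtime behavior dynamically on each agent invocation, you can use the new InvokeInlineAgent API. With either approach, your agents are secure and scalable, with configurable guardrails, a flexible set of model inference options, native access to knowledge bases, code interpretation, session memory, and more.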
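To make the difference from a managed agent concrete, the following is a minimal sketch of how an InvokeInlineAgent request might be assembled with the AWS SDK for Python (boto3). It assumes the `bedrock-agent-runtime` client and its `invoke_inline_agent` method; the helper names, the model ID, and the instruction text are illustrative placeholders and are not taken from the HR assistant code.

```python
import uuid


def build_inline_request(input_text, model_id, instruction, session_id=None):
    """Assemble keyword arguments for one inline agent invocation.

    No pre-created agent resource is referenced: the model and the
    instruction travel with every call.
    """
    return {
        "sessionId": session_id or str(uuid.uuid4()),
        "foundationModel": model_id,
        "instruction": instruction,
        "inputText": input_text,
    }


def invoke(client, request):
    """Send the request.

    `client` is assumed to be boto3.client("bedrock-agent-runtime").
    This function is defined but not called here, so the sketch runs
    without AWS credentials.
    """
    return client.invoke_inline_agent(**request)


request = build_inline_request(
    input_text="How many vacation days do I have left?",
    model_id="anthropic.claude-3-sonnet-20240229-v1:0",  # placeholder model ID
    instruction="You are an HR assistant that helps employees with HR tasks.",
)
```

Because the whole configuration is an ordinary dictionary, the application can change any field between two calls without a create, update, or prepare step.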

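Solution overview

Our HR assistant example shows how to build a single AI assistant that adapts to different user roles using the new inline agent capabilities in Amazon Bedrock Agents. When users interact with the assistant, it dynamically configures agent capabilities (such as model, instructions, knowledge bases, action groups, and guardrails) based on the user’s role and their specific selections. This approach creates a flexible system that adjusts its functionality in real time, making it more efficient than creating a separate agent for each user role or tool combination. The complete code for this HR assistant example is available in our GitHub repo.

This dynamic tool selection enables a personalized experience. When an employee without direct reports logs in, they see the set of tools that their role gives them access to. They can select from options such as requesting vacation time, checking company policies using the knowledge base, using a code interpreter for data analysis, or submitting expense reports. The inline agent assistant is then configured with only the selected tools, so it can assist the employee with their chosen tasks. In a real-world application, the user would not need to make the selection, because the application would make that decision and configure the agent invocation automatically at runtime. We make the selection explicit in this application so that its effect is visible.

Similarly, when a manager logs in to the same system, they see an extended set of tools that reflects their additional permissions. In addition to the employee-level tools, managers have access to capabilities such as running performance reviews. They can select which tools to use in their current session, and the inline agent is immediately configured with their choices.

The inclusion of knowledge bases is also adjusted based on the user’s role. Employees and managers see different levels of company policy information, with managers getting additional access to confidential data such as performance review and compensation details. For this demo, we implemented metadata filtering to retrieve only the documents appropriate to the user’s access level, which further improves efficiency and security.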
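As an illustration of role-based metadata filtering, the sketch below builds the knowledge base entry that could be passed in the `knowledgeBases` list of an inline agent request. The `access_level` metadata key, the role-to-level mapping, and the knowledge base ID are assumptions made for this example; the filter shape follows the vector search `filter` structure of Amazon Bedrock Knowledge Bases as we understand it.

```python
# Hypothetical mapping from user role to the document access levels it may read.
ALLOWED_LEVELS = {
    "employee": ["employee"],
    "manager": ["employee", "manager"],
}


def knowledge_base_for_role(role, knowledge_base_id):
    """Return a knowledge base configuration restricted to the role's documents."""
    if role not in ALLOWED_LEVELS:
        raise ValueError(f"unknown role: {role}")
    return {
        "knowledgeBaseId": knowledge_base_id,
        "description": "Company HR policies and guidelines.",
        "retrievalConfiguration": {
            "vectorSearchConfiguration": {
                # Only chunks whose access_level is in the allowed list are retrieved.
                "filter": {
                    "in": {"key": "access_level", "value": ALLOWED_LEVELS[role]}
                }
            }
        },
    }


employee_kb = knowledge_base_for_role("employee", "KB_ID_PLACEHOLDER")
manager_kb = knowledge_base_for_role("manager", "KB_ID_PLACEHOLDER")
```

Filtering at retrieval time means confidential chunks never reach the model for an employee session, rather than relying on the instruction to withhold them.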

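Let’s look at how the interface adapts to different user roles.

The employee view provides access to essential HR functions such as vacation requests, expense submissions, and company policy lookups. Users can select which of these tools to use in their current session.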

The manager view extends these capabilities to include supervisory functions like compensation management, demonstrating how the inline agent dynamically adjusts its available tools based on user permissions. Without inline agents, we would need to build and maintain two separate agents.

As shown in the preceding screenshots, the same HR assistant offers different tool selections based on the user’s role. An employee sees options like Knowledge Base, Apply Vacation Tool, and Submit Expense, whereas a manager has additional options like Performance Evaluation. Users can select which tools they want to add to the agent for their current interaction.

This flexibility allows for quick adaptation to user needs and preferences. For instance, if the company introduces a new policy for creating business travel requests, the tool catalog can be quickly updated to include a Create Business Travel Reservation tool. Employees can then choose to add this new tool to their agent configuration when they need to plan a business trip, or the application could automatically do so based on their role.

With Amazon Bedrock inline agents, you can create a catalog of actions from which the application, or its users, selects dynamically. This makes your solutions more flexible and adaptable, which suits business operations whose requirements change often. Users have more control over their AI assistant’s capabilities, and the system remains efficient by loading only the tools needed for each interaction.

Technical foundation: Dynamic configuration and action selection

Inline agents allow dynamic configuration at runtime, enabling a single agent to effectively perform the work of many. By specifying action groups and modifying instructions on the fly, even within the same session, you can create versatile AI applications that adapt to various scenarios without multiple agent deployments.

The following are key points about inline agents:

- Runtime configuration – Change the agent’s configuration, including its FM, at runtime. This enables rapid experimentation and adaptation without redeploying the application, reducing development cycles.

- Governance at tool level – Apply governance and access control at the tool level. With agents changing dynamically at runtime, tool-level governance helps maintain security and compliance regardless of the agent’s configuration.

- Agent efficiency – Provide only the necessary tools and instructions at runtime to reduce token usage and improve the agent’s accuracy. With fewer tools to choose from, the agent can select the right one more easily, which reduces hallucinations during tool selection. This approach can also lower cost and latency compared to static agents, because removing unnecessary tools, knowledge bases, and instructions reduces the number of input and output tokens processed by the agent’s large language model (LLM).

- Flexible action catalog – Create reusable actions for dynamic selection based on specific needs. This modular approach simplifies maintenance, updates, and scalability of your AI applications.

The following are examples of reusable actions:

- Enterprise system integration – Connect with systems like Salesforce, GitHub, or databases

- Utility tools – Perform common tasks such as sending emails or managing calendars

- Team-specific API access – Interact with specialized internal tools and services

- Data processing – Analyze text, structured data, or other information

- External services – Fetch weather updates, stock prices, or perform web searches

- Specialized ML models – Use specific machine learning (ML) models for targeted tasks
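A reusable action catalog of this kind can be sketched as a dictionary of action group definitions from which a subset is picked per request. The tool names, the Lambda ARN, and the function schema below are hypothetical; the dictionary keys follow the `actionGroups` structure of `invoke_inline_agent` as we understand it.

```python
def lambda_action(name, description, lambda_arn, functions):
    """Build an action group that is executed by an AWS Lambda function."""
    return {
        "actionGroupName": name,
        "description": description,
        "actionGroupExecutor": {"lambda": lambda_arn},
        "functionSchema": {"functions": functions},
    }


# Hypothetical catalog; each entry can be reused across agents and requests.
CATALOG = {
    "apply_vacation": lambda_action(
        name="ApplyVacation",
        description="Request and track time off.",
        lambda_arn="arn:aws:lambda:us-east-1:111122223333:function:apply-vacation",
        functions=[
            {
                "name": "request_time_off",
                "description": "Submit a vacation request.",
                "parameters": {
                    "start_date": {"type": "string", "description": "First day off", "required": True},
                    "days": {"type": "integer", "description": "Number of days", "required": True},
                },
            }
        ],
    ),
    # Built-in code interpreter, enabled through its parent action group signature.
    "code_interpreter": {
        "actionGroupName": "CodeInterpreter",
        "parentActionGroupSignature": "AMAZON.CodeInterpreter",
    },
}


def select_actions(tool_names):
    """Return the action groups for the requested tools, in the order given."""
    return [CATALOG[name] for name in tool_names]


action_groups = select_actions(["code_interpreter", "apply_vacation"])
```

The returned list is what an application would pass as `actionGroups` for a given invocation; adding a new tool means adding one catalog entry, not redeploying an agent.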

When using inline agents, you configure parameters for the following:

- Contextual tool selection based on user intent or conversation flow

- Adaptation to different user roles and permissions

- Switching between communication styles or personas

- Model selection based on task complexity

The inline agent uses the configuration you provide at runtime, allowing for highly flexible AI assistants that efficiently handle various tasks across different business contexts.
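As a rough sketch of persona switching and model selection, the code below derives the instruction and the model for each turn while keeping the same session ID. The persona wording, the two model IDs, and the "simple" versus "complex" labels are assumptions for illustration; how task complexity is estimated is left to the application.

```python
BASE_INSTRUCTION = "You are an HR assistant that helps users with HR-related tasks."

# Hypothetical persona instructions appended to the base instruction.
PERSONAS = {
    "professional": "Respond in a formal, business-like manner.",
    "casual": "Respond in a friendly, everyday manner.",
}

# Example model IDs; a smaller model handles simple tasks to save cost.
MODELS = {
    "simple": "anthropic.claude-3-haiku-20240307-v1:0",
    "complex": "anthropic.claude-3-sonnet-20240229-v1:0",
}


def configure_turn(session_id, input_text, persona, complexity):
    """Build the per-turn request; only the session ID is carried over."""
    return {
        "sessionId": session_id,
        "foundationModel": MODELS[complexity],
        "instruction": f"{BASE_INSTRUCTION} {PERSONAS[persona]}",
        "inputText": input_text,
    }


# Two turns in the same session with different persona and model.
turn_1 = configure_turn("session-123", "What is the travel policy?", "professional", "simple")
turn_2 = configure_turn("session-123", "Compare my expenses by quarter.", "casual", "complex")
```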

Building an HR assistant using inline agents

Let’s look at how we built our HR Assistant using Amazon Bedrock inline agents:

- Create a tool catalog – We developed a demo catalog of HR-related tools, including:

  - Knowledge Base – Uses Amazon Bedrock Knowledge Bases to access company policies and guidelines based on the role of the application user. To filter the knowledge base content by role, you also need to provide a metadata file that specifies which employee roles can access each file.

  - Apply Vacation – For requesting and tracking time off.

  - Expense Report – For submitting and managing expense reports.

  - Code Interpreter – For performing calculations and data analysis.

  - Compensation Management – For conducting and reviewing employee compensation assessments (manager-only access).

- Set conversation tone – We defined multiple conversation tones to suit different interaction styles:

  - Professional – For formal, business-like interactions.

  - Casual – For friendly, everyday support.

  - Enthusiastic – For upbeat, encouraging assistance.

- Implement access control – We implemented role-based access control. The application backend checks the user’s role (employee or manager), grants access to the appropriate tools and information, and passes that configuration to the inline agent. The role is also used to configure metadata filtering in the knowledge bases, so that responses draw only on documents appropriate to the user’s access level. Tools are selected dynamically at runtime: users can switch personas or add and remove tools during their session, allowing the agent to adapt to different conversation needs in real time.

- Integrate the agent with other services and tools – We connected the inline agent to:

  - Amazon Bedrock Knowledge Bases for company policies, with metadata filtering for role-based access.

  - AWS Lambda functions for executing specific actions (such as submitting vacation requests or expense reports).

  - A code interpreter tool for performing calculations and data analysis.

- Create the UI – We created a Flask-based UI that performs the following actions:

  - Displays available tools based on the user’s role.

  - Allows users to select different personas.

  - Provides a chat window for interacting with the HR assistant.
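The access control step above can be sketched as a backend check that intersects the tools a user selected with the tools their role permits, before anything is passed to the inline agent. The role names come from this post; the tool identifiers and the function name are hypothetical.

```python
EMPLOYEE_TOOLS = {"knowledge_base", "apply_vacation", "expense_report", "code_interpreter"}

# Managers get every employee tool plus the manager-only compensation tool.
ROLE_TOOLS = {
    "employee": EMPLOYEE_TOOLS,
    "manager": EMPLOYEE_TOOLS | {"compensation_management"},
}


def authorized_tools(role, requested):
    """Keep only the requested tools that the role is allowed to use.

    Unknown roles get no tools. The order of `requested` is preserved.
    """
    permitted = ROLE_TOOLS.get(role, set())
    return [tool for tool in requested if tool in permitted]


selection = ["apply_vacation", "compensation_management"]
employee_tools = authorized_tools("employee", selection)
manager_tools = authorized_tools("manager", selection)
```

Enforcing the check on the server side matters because the UI only hides tools; a crafted request could otherwise ask for a tool the role should not reach.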

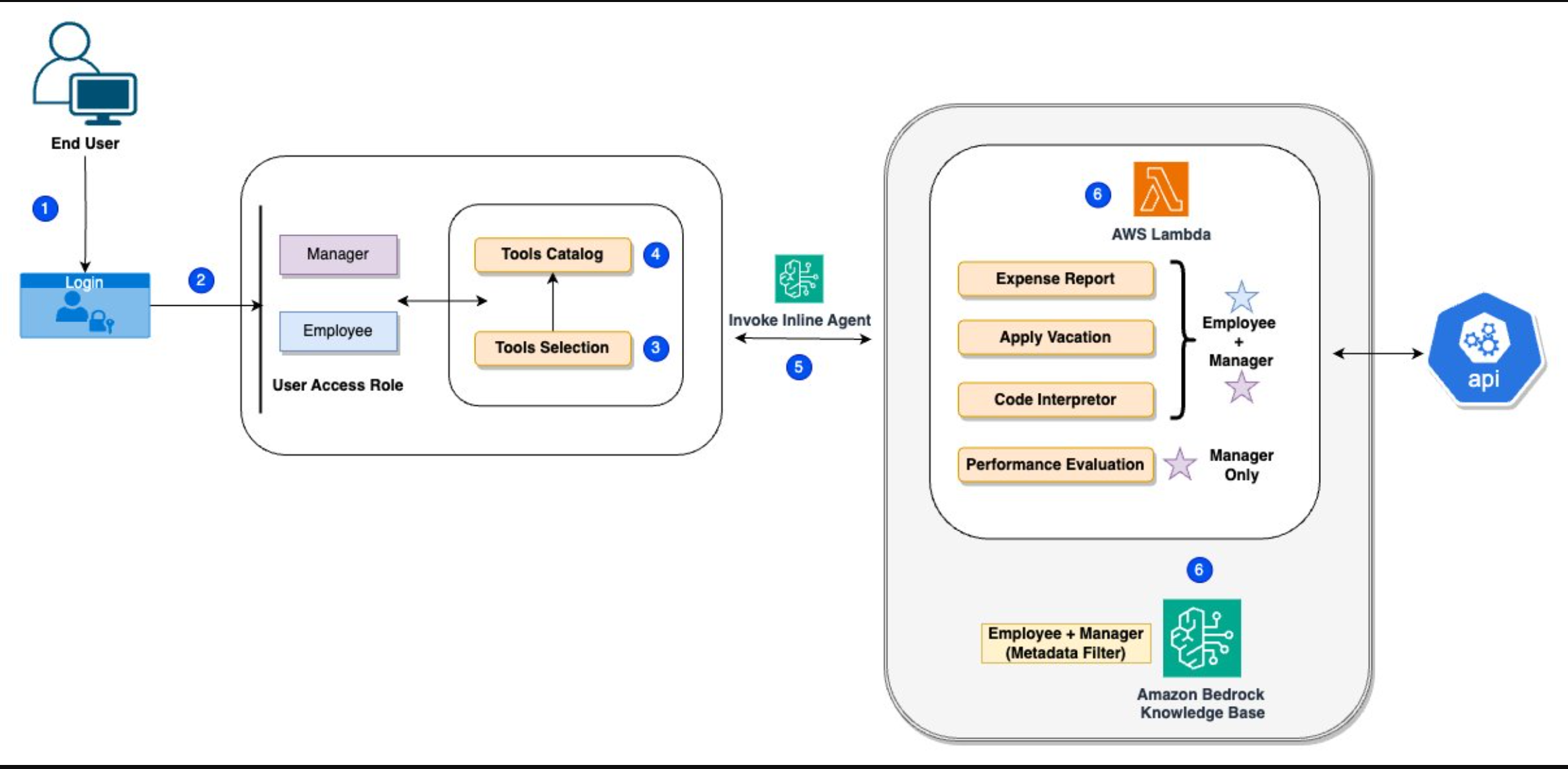

To understand how this dynamic role-based functionality works under the hood, let’s examine the following system architecture diagram.

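As shown in the preceding architecture diagram, the system works as follows:

- The end user logs in and is identified as either a manager or an employee.

- The user selects the tools that they have access to and makes a request to the HR assistant.

- The agent breaks down the problem and uses the available tools to solve the query in steps, which may include:

  - Amazon Bedrock Knowledge Bases (with metadata filtering for role-based access).

  - Lambda functions for specific actions.

  - A code interpreter tool for calculations.

  - A compensation tool (accessible only to managers, for submitting base pay raise requests).

- The application uses the Amazon Bedrock inline agent to dynamically pass in the appropriate tools based on the user’s role and request.

- The agent uses the selected tools to process the request and provides a response to the user.

This approach provides a flexible, scalable solution that can quickly adapt to different user roles and changing business needs.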
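For the final step, the agent’s answer is returned as a stream of events. The sketch below shows one way to assemble the text, assuming the response of `invoke_inline_agent` exposes a `completion` iterable whose text events carry `chunk` entries with a `bytes` payload; the function name is hypothetical, and the response here is a hand-built stand-in rather than real service output.

```python
def collect_completion(response):
    """Concatenate the text chunks of an agent response stream.

    Events without a chunk (for example, trace events) are skipped.
    """
    parts = []
    for event in response.get("completion", []):
        chunk = event.get("chunk")
        if chunk and "bytes" in chunk:
            parts.append(chunk["bytes"])
    # Join before decoding so a multi-byte character split across chunks survives.
    return b"".join(parts).decode("utf-8")


# Stand-in response with one non-text event and two text chunks.
fake_response = {
    "completion": [
        {"trace": {"note": "illustrative trace event"}},
        {"chunk": {"bytes": b"Your request "}},
        {"chunk": {"bytes": b"was submitted."}},
    ]
}
answer = collect_completion(fake_response)
```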

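Conclusion

In this post, we introduced the Amazon Bedrock inline agent functionality and highlighted its application to an HR use case. We dynamically selected tools based on the user’s role and permissions, adapted instructions to set a conversation tone, and selected different models at runtime. With inline agents, you can change how you build and deploy AI assistants. By dynamically adapting tools, instructions, and models at runtime, you can:

- Create personalized experiences for different user roles

- Optimize costs by matching model capabilities to task complexity

- Streamline development and maintenance

- Scale efficiently without managing multiple agent configurations

For organizations that need highly dynamic behavior, whether you’re an AI startup, a SaaS provider, or an enterprise solution team, inline agents offer a scalable approach to building intelligent assistants that grow with your needs. To get started, explore our GitHub repo and the HR assistant demo application, which demonstrate key implementation patterns and best practices.

To learn more about how to be most successful in your agent journey, read our two-part blog series: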

- Best practices for building robust generative AI applications with Amazon Bedrock Agents – Part 1

- Best practices for building robust generative AI applications with Amazon Bedrock Agents – Part 2

To get started with Amazon Bedrock Agents, check out the following GitHub repository with example code.
