
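Voice AI is transforming the way we interact with technology, allowing for more natural and intuitive conversations. At the same time, advanced AI agents can now understand complex questions and act autonomously on our behalf.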

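In Part 1 of this series, you learned how to use the combination of Amazon Bedrock and Pipecat, an open source framework for voice and multimodal conversational AI agents, to build applications with human-like conversational AI. You learned about common use cases for voice agents and the cascaded models approach, in which you orchestrate several components to build your voice AI agent.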

In this post (Part 2), you explore how to use Amazon Nova Sonic, a speech-to-speech foundation model, and the benefits of using a unified model.

Architecture: Using Amazon Nova Sonic speech-to-speech

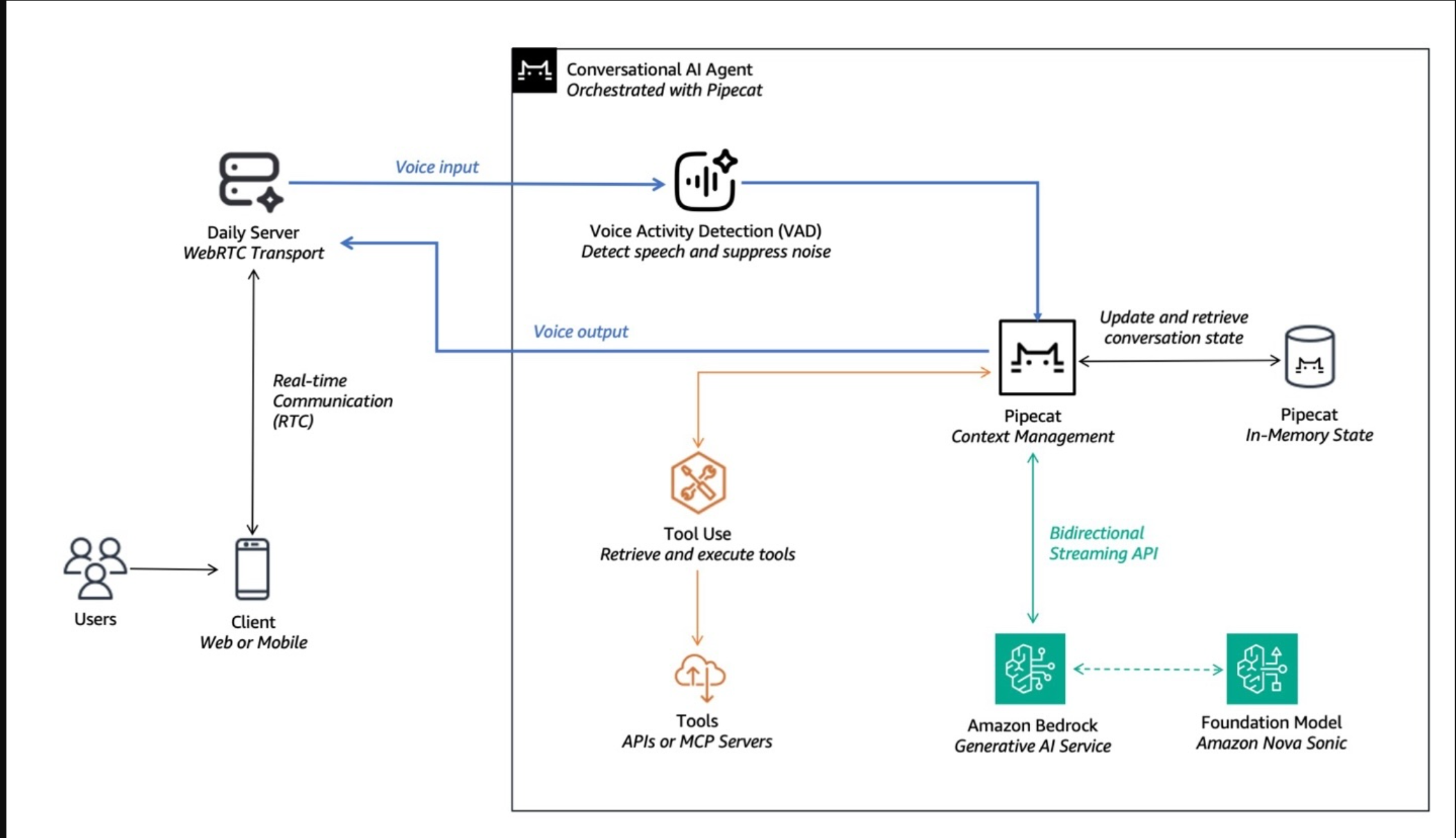

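Amazon Nova Sonic is a speech-to-speech foundation model that delivers real-time, human-like voice conversations with industry-leading price performance and low latency. Although the cascaded models approach outlined in Part 1 is flexible and modular, it requires orchestrating automatic speech recognition (ASR), natural language understanding (NLU), and text-to-speech (TTS) models. For conversational use cases, this can introduce latency and result in the loss of tone and prosody. Nova Sonic combines these components into a unified model that processes audio in real time in a single forward pass, reducing latency while simplifying development.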
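The structural difference between the two approaches can be sketched as a toy in Python. This is an illustration under stated assumptions, not Pipecat or Nova Sonic code: `AudioTurn`, `cascaded_turn`, and `unified_turn` are hypothetical names, and every stage is a stub. What it shows is where information is lost: in the cascade, the language stage receives only the ASR transcript, so acoustic cues such as a hesitant tone are gone before the reply is generated and spoken, whereas a unified model receives the audio itself.

```python
from dataclasses import dataclass, field


@dataclass
class AudioTurn:
    """Hypothetical stand-in for one user utterance."""
    transcript: str                            # the words (stubbed; real input is audio samples)
    cues: dict = field(default_factory=dict)   # acoustic cues, e.g. {"tone": "hesitant"}


def asr(audio: AudioTurn) -> str:
    # Stub ASR stage: only the words survive; acoustic cues are dropped here.
    return audio.transcript


def nlu_and_respond(text: str) -> str:
    # Stub language stage: it sees text only.
    return f"You said: {text}"


def tts(text: str) -> dict:
    # Stub TTS stage: speaks in a default style because it never saw the input audio.
    return {"text": text, "style": "neutral"}


def cascaded_turn(audio: AudioTurn) -> dict:
    # Three hand-offs; in a real system each one adds its own latency.
    return tts(nlu_and_respond(asr(audio)))


def unified_turn(audio: AudioTurn) -> dict:
    # Stub for a single speech-to-speech model: one call sees the words *and*
    # the cues, so it can adapt its response style to how the user spoke.
    style = "reassuring" if audio.cues.get("tone") == "hesitant" else "neutral"
    return {"text": f"You said: {audio.transcript}", "style": style}


if __name__ == "__main__":
    turn = AudioTurn("I think I need help", cues={"tone": "hesitant"})
    print(cascaded_turn(turn))  # style stays "neutral"
    print(unified_turn(turn))   # style adapts to the hesitant tone
```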

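By unifying these capabilities, the model can dynamically adjust its voice responses based on the acoustic characteristics of the input and the conversational context, creating more fluid and contextually appropriate dialogue. The system recognizes conversational subtleties such as natural pauses, hesitations, and turn-taking cues, which allows it to respond at the right moment and seamlessly handle interruptions. Amazon Nova Sonic also supports tool use and agentic RAG with Amazon Bedrock Knowledge Bases, enabling your voice agents to retrieve information. Refer to the following figure to understand the end-to-end flow.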

The choice between the two approaches depends on your use case. While the capabilities of Amazon Nova Sonic are state-of-the-art, the cascaded models approach outlined in Part 1 might be suitable if you require additional flexibility or modularity for advanced use cases.

AWS collaboration with Pipecat

To achieve a seamless integration, AWS collaborated with the Pipecat team to support Amazon Nova Sonic in version v0.0.67, making it straightforward to integrate state-of-the-art speech capabilities into your applications.

Kwindla Hultman Kramer, Chief Executive Officer at Daily.co and Creator of Pipecat, shares his perspective on this collaboration:

“Amazon’s new Nova Sonic speech-to-speech model is a leap forward for real-time voice AI. The bidirectional streaming API, natural-sounding voices, and robust tool-calling capabilities open up exciting new possibilities for developers. Integrating Nova Sonic with Pipecat means we can build conversational agents that not only understand and respond in real time, but can also take meaningful actions, like scheduling appointments or fetching information, directly through natural conversation. This is the kind of technology that truly transforms how people interact with software, making voice interfaces faster, more human, and genuinely useful in everyday workflows.”

“Looking forward, we’re thrilled to collaborate with AWS on a roadmap that helps customers reimagine their contact centers with integration to Amazon Connect and harness the power of multi-agent workflows through the Strands agentic framework. Together, we’re enabling organizations to deliver more intelligent, efficient, and personalized customer experiences, whether it’s through real-time contact center transformation or orchestrating sophisticated agentic workflows across industries.”

Getting started with Amazon Nova Sonic and Pipecat

To guide your implementation, we provide a comprehensive code example that demonstrates the basic functionality. This example shows how to build a complete voice AI agent with Amazon Nova Sonic and Pipecat.

Prerequisites

Before using the provided code examples with Amazon Nova Sonic, make sure that you have the following:

- Python 3.12+

- An AWS account with appropriate AWS Identity and Access Management (IAM) permissions for Amazon Bedrock, Amazon Transcribe, and Amazon Polly

- Access to Amazon Nova Sonic on Amazon Bedrock

- A Daily API key

- A modern web browser (such as Google Chrome or Mozilla Firefox) with WebRTC support

Implementation steps

After you complete the prerequisites, you can start setting up your sample voice agent:

- Clone the repository:

- Set up a virtual environment:

- Create a .env file with your credentials:

- Start the server:

- Connect using a browser at http://localhost:7860 and grant microphone access.

- Start the conversation with your AI voice agent.
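Before starting the server, it can help to confirm that your .env file defines every credential the bot needs. The following sketch uses only the Python standard library. The key names are assumptions: `AWS_ACCESS_KEY_ID`, `AWS_SECRET_ACCESS_KEY`, and `AWS_REGION` are the standard AWS SDK environment variables, while `DAILY_API_KEY` is a hypothetical name. Check the sample's README for the exact keys it expects.

```python
from pathlib import Path

# Assumed key names; adjust them to match the sample's README.
REQUIRED_KEYS = ("AWS_ACCESS_KEY_ID", "AWS_SECRET_ACCESS_KEY", "AWS_REGION", "DAILY_API_KEY")


def parse_env(text: str) -> dict[str, str]:
    """Parse simple KEY=VALUE lines, ignoring blank lines and # comments."""
    values: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Strip optional surrounding quotes, e.g. KEY="value".
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def missing_keys(values: dict[str, str], required: tuple[str, ...] = REQUIRED_KEYS) -> list[str]:
    """Return the required keys that are absent or empty."""
    return [key for key in required if not values.get(key)]


if __name__ == "__main__":
    env_path = Path(".env")
    if not env_path.exists():
        raise SystemExit(".env not found in the current directory")
    missing = missing_keys(parse_env(env_path.read_text()))
    if missing:
        raise SystemExit(f"Missing values in .env: {', '.join(missing)}")
    print(".env looks complete")
```

If your environment already includes the python-dotenv package, its `dotenv_values()` function returns a comparable dictionary and handles more edge cases than this minimal parser. In either case, keep the .env file out of version control.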

Customize your voice AI agent

To customize your voice AI agent, start by:

- Modifying bot.py to change conversation logic.

- Adjusting model selection in bot.py for your latency and quality needs.
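One way to keep these customizations manageable is to gather the values you are likely to tune in one place near the top of bot.py. The following sketch is illustrative only: `BotSettings`, its fields, and the `BOT_*` environment variables are hypothetical, and how these values are passed on depends on the Pipecat service classes the sample uses. The model ID `amazon.nova-sonic-v1:0` and the voice name `matthew` are assumptions; confirm both against the Amazon Bedrock documentation for your Region.

```python
import os
from dataclasses import dataclass, replace
from typing import Mapping


@dataclass(frozen=True)
class BotSettings:
    """Hypothetical container for the settings you are most likely to tune in bot.py."""
    model_id: str = "amazon.nova-sonic-v1:0"  # assumed Bedrock model ID for Nova Sonic
    voice_id: str = "matthew"                 # assumed voice name; check the docs
    system_instruction: str = (
        "You are a friendly assistant. Keep answers short because they are spoken aloud."
    )

    @classmethod
    def from_env(cls, env: Mapping[str, str] = os.environ) -> "BotSettings":
        # Override the defaults with hypothetical BOT_* variables when they are set.
        defaults = cls()
        return replace(
            defaults,
            model_id=env.get("BOT_MODEL_ID", defaults.model_id),
            voice_id=env.get("BOT_VOICE_ID", defaults.voice_id),
            system_instruction=env.get("BOT_SYSTEM_INSTRUCTION", defaults.system_instruction),
        )


if __name__ == "__main__":
    print(BotSettings.from_env())
```

Keeping the system instruction brief and asking for short answers is a deliberate choice here: long spoken responses increase perceived latency and are harder for listeners to follow.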

To learn more, see the README of our code sample on GitHub.

Clean up

The preceding instructions are for setting up the application in your local environment. The local application uses AWS services and Daily through IAM and API credentials. For security and to avoid unanticipated costs, delete these credentials when you're finished so that they can no longer be used.

Amazon Nova Sonic and Pipecat in action

The first demo showcases an intelligent healthcare assistant scenario. It was presented during the AWS Summit Sydney 2025 keynote by Rada Stanic, Chief Technologist, and Melanie Li, Senior Specialist Solutions Architect – Generative AI.

A second demo showcases a simple fun facts voice agent running in a local environment with SmallWebRTCTransport. As the user speaks, the voice agent displays a real-time transcription in the terminal.

Enhancing agentic capabilities with Strands Agents

A practical way to boost agentic capability and understanding is to implement a general tool call that delegates tool selection to an external agent such as a Strands Agent. The delegated Strands Agent can then reason or think about your complex query, perform multi-step tasks with tool calls, and return a summarized response.

To illustrate, let’s review a simple example. If the user asks a question like “What is the weather like near the Seattle Aquarium?”, the voice agent can delegate to a Strands Agent through a general tool call such as handle_query.

The Strands Agent handles the query and reasons about the task: for example, it first needs to locate the Seattle Aquarium and then check the weather at that location.

The Strands Agent then executes the search_places tool call, followed by a get_weather tool call, and returns a response to the parent agent as the result of the handle_query tool call. This is also known as the agents as tools pattern.

To learn more, see the example in our hands-on workshop.

Conclusion

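With the combination of open source frameworks such as Pipecat and powerful foundation models on Amazon Bedrock, building intelligent AI voice agents is more achievable than ever.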

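In this series, you learned about two common approaches to building AI voice agents. In Part 1, you learned about the cascaded models approach, diving into each component of a conversational AI system. In Part 2, you learned how Amazon Nova Sonic, a speech-to-speech foundation model, simplifies implementation by unifying these components into a single model architecture. Looking ahead, stay tuned for exciting developments in multimodal foundation models, including the upcoming Nova any-to-any models. These innovations will continue to improve your voice AI applications.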

Resources

To learn more about voice AI agents, see the following resources:

- Explore our code sample on GitHub

- Try our hands-on workshop: Building intelligent voice AI agents with Amazon Nova Sonic, Amazon Bedrock and Pipecat

To get started with your own voice AI project, contact your AWS account team to explore an engagement with the AWS Generative AI Innovation Center (GAIIC).
