
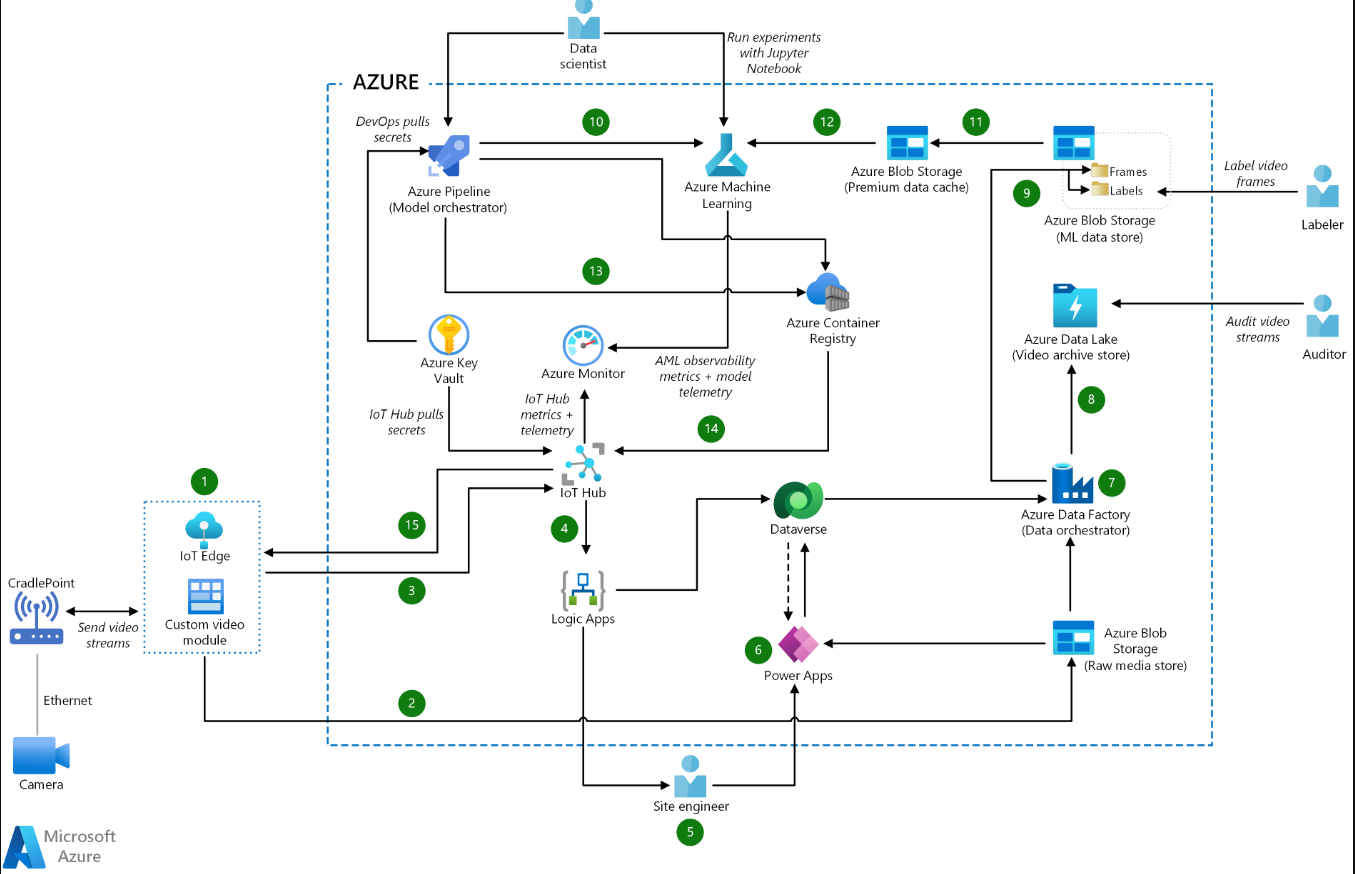

This example architecture shows an end-to-end approach to internet-of-things (IoT) computer vision in manufacturing.

Architecture


Dataflow

- The IoT Edge custom module captures the live video stream, breaks it down into frames, and performs inference on the image data to determine if an incident has occurred.

- The custom module also uses Azure Storage SDK methods or the Blob Storage REST API to upload the raw video files to Azure Storage, which acts as a raw media store.

- The custom module sends the inferencing results and metadata to Azure IoT Hub, which acts as a central message hub for communications in both directions.

- Azure Logic Apps monitors IoT Hub for messages about incident events. Logic Apps routes inferencing results and metadata to Microsoft Dataverse for storage.

- When an incident occurs, Logic Apps sends SMS and e-mail notifications to the site engineer. The site engineer uses a mobile app based on Power Apps to acknowledge and resolve the incident.

- Power Apps pulls inferencing results and metadata from Dataverse and raw video files from Blob Storage to display relevant information about the incident. Power Apps updates Dataverse with the incident resolution that the site engineer provided. This step acts as human-in-the-loop validation for model retraining purposes.

- Azure Data Factory is the data orchestrator. It fetches raw video files from the raw media store and gets the corresponding inferencing results and metadata from Dataverse.

- Data Factory stores the raw video files, plus the metadata, in Azure Data Lake Storage, which serves as a video archive for auditing purposes.

- Data Factory breaks raw video files into frames, converts the inferencing results into labels, and uploads the data into Blob Storage, which acts as the ML data store.

- Changes to the model code automatically trigger the Azure Pipelines model orchestrator pipeline, which operators can also trigger manually. Code changes also start the ML model training and validation process in Azure Machine Learning.

- Azure Machine Learning starts training the model by validating the data from the ML data store and copying the required datasets to Azure Premium Blob Storage. This performance tier provides a data cache for faster model training.

- Azure Machine Learning uses the dataset in the Premium data cache to train the model, validate the trained model's performance, score it against previously trained models, and register it in the Azure Machine Learning registry.

- The Azure Pipelines model orchestrator reviews the performance of the newly trained ML model and determines if it's better than previous models. If the new model performs better, the pipeline downloads the model from Azure Machine Learning and builds a new version of the ML inferencing module to publish in Azure Container Registry.

- When a new ML inferencing module is ready, Azure Pipelines updates the IoT Edge module deployment in IoT Hub to use the new module container from Container Registry.

- IoT Hub updates the IoT Edge device with the new ML inferencing module.
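The edge portion of this dataflow (capture frames, run inference, and send results to IoT Hub) can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the solution's actual module: the `Frame` type, the `detect` scoring function, the 0.8 confidence threshold, the every-fifth-frame sampling, and the message field names are all hypothetical. In a real IoT Edge module, frames would come from the decoded camera stream, and each JSON payload would be sent to IoT Hub through the Azure IoT Device SDK's module client.

```python
import json
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Callable, Iterable, Iterator, List


@dataclass
class Frame:
    """Hypothetical decoded video frame; a real module would hold image pixels."""
    index: int
    data: bytes


def sample_frames(frames: Iterable[Frame], every_nth: int) -> Iterator[Frame]:
    """Keep every n-th frame to reduce the inference load on the edge device."""
    for frame in frames:
        if frame.index % every_nth == 0:
            yield frame


def build_incident_messages(
    frames: Iterable[Frame],
    detect: Callable[[bytes], float],
    camera_id: str,
    threshold: float = 0.8,
    every_nth: int = 5,
) -> List[str]:
    """Run inference on sampled frames and return JSON payloads for likely incidents."""
    messages = []
    for frame in sample_frames(frames, every_nth):
        score = detect(frame.data)  # hypothetical model call returning a confidence
        if score >= threshold:
            payload = {
                # Hypothetical message schema for an incident event.
                "cameraId": camera_id,
                "frameIndex": frame.index,
                "confidence": round(score, 3),
                "timestamp": datetime.now(timezone.utc).isoformat(),
            }
            messages.append(json.dumps(payload))
    return messages


# Usage with a fake detector that flags frames 10 and 15.
frames = [Frame(i, bytes([i])) for i in range(20)]
msgs = build_incident_messages(frames, lambda d: 0.9 if d[0] in (10, 15) else 0.1, "cam-01")
print([json.loads(m)["frameIndex"] for m in msgs])  # → [10, 15]
```

Sampling frames and sending only likely incidents keeps both the edge compute load and the IoT Hub message volume low, which is the main reason to run inference on the device.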

Components

- Azure IoT Edge service analyzes device data locally to send less data to the cloud, react to events quickly, and operate in low-connectivity conditions. An IoT Edge ML module can extract actionable insights from streaming video data.

- Azure IoT Hub is a managed service that enables reliable and secure bidirectional communications between millions of IoT devices and a cloud-based back end. IoT Hub provides per-device authentication, message routing, integration with other Azure services, and management features to control and configure IoT devices.

- Azure Logic Apps is a serverless cloud service for creating and running automated workflows that integrate apps, data, services, and systems. Developers can use a visual designer to schedule and orchestrate common task workflows. Logic Apps has connectors for many popular cloud services, on-premises products, and other software as a service (SaaS) applications. In this solution, Logic Apps runs the automated notification workflow that sends SMS and email alerts to site engineers.

- Power Apps is a data platform and a suite of apps, services, and connectors. It serves as a rapid application development environment. The underlying data platform is Microsoft Dataverse.

- Dataverse is a cloud-based storage platform for Power Apps. Dataverse supports human-in-the-loop notifications and stores metadata associated with the MLOps data pipeline.

- Azure Blob Storage is scalable and secure object storage for unstructured data. You can use it for archives, data lakes, high-performance computing, machine learning, and cloud-native workloads. In this solution, Blob Storage provides the ML data store and a Premium data cache for training the ML model. The Premium tier of Blob Storage is for workloads that require fast response times and high transaction rates, like the human-in-the-loop video labeling in this example.

- Data Lake Storage is a massively scalable and secure storage service for high-performance analytics workloads. The data typically comes from multiple heterogeneous sources and can be structured, semi-structured, or unstructured. Azure Data Lake Storage Gen2 combines Azure Data Lake Storage Gen1 capabilities with Blob Storage, and provides file system semantics, file-level security, and scale. It also offers the tiered storage, high availability, and disaster recovery capabilities of Blob Storage. In this solution, Data Lake Storage provides the archival video store for the raw video files and metadata.

- Azure Data Factory is a hybrid, fully managed, serverless solution for data integration and transformation workflows. It provides a code-free UI and an easy-to-use monitoring panel. Azure Data Factory uses pipelines for data movement, and mapping data flows perform transformation tasks such as extract, transform, and load (ETL) and extract, load, and transform (ELT). In this example, Data Factory runs an ETL pipeline that prepares the inferencing data and stores it for retraining.

- Azure Machine Learning is an enterprise-grade machine learning service for building and deploying models quickly. It provides users at all skill levels with a low-code designer, automated machine learning, and a hosted Jupyter notebook environment that supports various IDEs.

- Azure Pipelines, part of Azure DevOps team-based developer services, creates continuous integration (CI) and continuous deployment (CD) pipelines. In this example, the Azure Pipelines model orchestrator validates ML code, triggers serverless task pipelines, compares ML models, and builds the inferencing container.

- Azure Container Registry is a managed Docker registry service for building, storing, and managing container images, including containerized ML models.

- Azure Monitor collects telemetry from Azure resources, so teams can proactively identify problems and maximize performance and reliability.
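The model comparison that the Azure Pipelines model orchestrator performs before building a new inferencing container might look like the following sketch. The metric names, the rule of promoting only when recall improves without a large precision drop, and the thresholds are assumptions for illustration; in practice, the metrics would come from the Azure Machine Learning training run or model registry.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ModelMetrics:
    """Hypothetical evaluation metrics logged by the training run."""
    precision: float
    recall: float


def should_promote(
    candidate: ModelMetrics,
    baseline: Optional[ModelMetrics],
    min_recall_gain: float = 0.01,
    max_precision_drop: float = 0.02,
) -> bool:
    """Decide whether a newly trained model should replace the deployed one."""
    if baseline is None:
        # Nothing deployed yet, so any successfully validated model is promoted.
        return True
    recall_gain = candidate.recall - baseline.recall
    precision_drop = baseline.precision - candidate.precision
    return recall_gain >= min_recall_gain and precision_drop <= max_precision_drop


deployed = ModelMetrics(precision=0.95, recall=0.90)
print(should_promote(ModelMetrics(precision=0.94, recall=0.92), deployed))  # → True
print(should_promote(ModelMetrics(precision=0.90, recall=0.95), deployed))  # → False
```

The sketch favors recall because, for safety and quality incidents, a missed detection is usually costlier than a false alarm that a site engineer can dismiss. Choose the metrics and margins that fit your own scenario.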

Alternatives

Instead of using the data pipeline to break down the video stream into image frames, you can deploy an Azure Blob Storage module onto the IoT Edge device. The inferencing module then uploads the inferenced image frames to the storage module on the edge device. The storage module determines when to upload the frames directly to the ML data store. The advantage of this approach is that it removes a step from the data pipeline. The tradeoff is that the edge device is tightly coupled to Azure Blob Storage.

For model orchestration, you can use either Azure Pipelines or Azure Data Factory.

- The Azure Pipelines advantage is its close ties with the ML model code. You can trigger the training pipeline easily with code changes through CI/CD.

- The benefit of Data Factory is that each pipeline can provision the required compute resources. Data Factory doesn't hold on to the Azure Pipelines agents to run ML training, which could congest the normal CI/CD flow.
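Azure Pipelines supports path filters on CI triggers, so only commits that touch model code start training. The following sketch illustrates that idea in Python. The repository paths are hypothetical, and Python's `fnmatch` matching only approximates the way Azure Pipelines evaluates its own path filters; in a real pipeline, you'd declare the filters in the pipeline's YAML trigger instead.

```python
from fnmatch import fnmatch
from typing import Iterable

# Hypothetical repository layout; adjust the patterns to match your repo.
TRAINING_PATHS = ["src/model/*", "pipelines/training/*"]
EXCLUDED_PATHS = ["*.md"]


def triggers_training(changed_files: Iterable[str]) -> bool:
    """Return True if any changed file is training code that isn't excluded."""
    for path in changed_files:
        if any(fnmatch(path, pattern) for pattern in EXCLUDED_PATHS):
            continue  # documentation-only changes don't retrain the model
        if any(fnmatch(path, pattern) for pattern in TRAINING_PATHS):
            return True
    return False


print(triggers_training(["src/model/train.py"]))                 # → True
print(triggers_training(["src/model/README.md", "docs/faq.md"]))  # → False
```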

Scenario details

Fully automated smart factories use artificial intelligence (AI) and machine learning (ML) to analyze data, run systems, and improve processes over time.

In this example, cameras send images to an Azure IoT Edge device that runs an ML model. The model runs inference on the images and sends actionable output to the cloud for further processing. Human interventions are part of the intelligence the ML model captures. The ML process is a continuous cycle of training, testing, tuning, and validating the ML algorithms.

Potential use cases

Manufacturing processes use IoT computer vision in safety and quality assurance applications. IoT computer vision systems can:

- Help ensure compliance with manufacturing guidelines like proper labeling.

- Identify manufacturing defects like surface unevenness.

- Enhance security by monitoring building or area entrances.

- Uphold worker safety by detecting personal protective equipment (PPE) usage and other safety practices.

Considerations

These considerations implement the pillars of the Azure Well-Architected Framework, which is a set of guiding tenets that can be used to improve the quality of a workload. For more information, see Microsoft Azure Well-Architected Framework.

Availability

ML-based applications typically require one set of resources for training and another for serving. Training resources generally don't need high availability, as live production requests don't directly use these resources. Resources required for serving requests need to have high availability.

Operations

This solution is divided into three operational areas:

- In IoT operations, an ML model on the edge device uses real-time images from connected cameras to run inference on video frames. The edge device also sends cached video streams to cloud storage to use for auditing and model retraining. After ML retraining, Azure IoT Hub updates the edge device with the new ML inferencing module.

- MLOps uses DevOps practices to orchestrate model training, testing, and deployment operations. MLOps life cycle management automates the process of productionizing ML models that are used for complex decision-making. The key to MLOps is tight coordination among the teams that build, train, evaluate, and deploy the ML models.

- Human-in-the-loop operations notify people to intervene at certain steps in the automation. In human-in-the-loop transactions, workers check and evaluate the results of the ML predictions. Human interventions become part of the intelligence the ML model captures, and help validate the model.

The following human roles are part of this solution:

- Site engineers receive the incident notifications that Logic Apps sends and manually validate the results or predictions of the ML model. For example, a site engineer might examine a valve that the model predicted had failed.

- Data labelers label datasets for retraining to complete the loop of the end-to-end solution. The data labeling process is especially important for image data because it's the first step in training a reliable model. In this example, Azure Data Factory organizes the video frames into positive and false positive groupings, which make the data labeler's work easier.

- Data scientists use the labeled datasets to train the algorithms to make correct real-life predictions. Data scientists use MLOps with GitHub Actions or Azure Pipelines in a CI process to automatically train and validate a model. Training can be triggered manually, or automatically by checking in new training scripts or data. Data scientists work in an Azure Machine Learning workspace that can automatically register, deploy, and manage models.

- IoT engineers use Azure Pipelines to publish IoT Edge modules in containers to Container Registry. Engineers can deploy and scale the infrastructure on demand by using a CD pipeline.

- Safety auditors review archived video streams to detect anomalies, assess compliance, and confirm results when questions arise about a model's predictions.

In this solution, IoT Hub ingests telemetry from the cameras and sends the metrics to Azure Monitor, so site engineers can investigate and troubleshoot. Azure Machine Learning sends observability metrics and model telemetry to Azure Monitor, helping the IoT engineers and data scientists to optimize operations.
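The grouping that Data Factory performs for data labelers, based on the incident resolutions that site engineers record in Power Apps, can be sketched as follows. The `InferenceRecord` shape and the `confirmed` and `rejected` resolution values are hypothetical stand-ins for the columns you'd read from Dataverse. Unresolved incidents are left out until someone reviews them.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class InferenceRecord:
    """Hypothetical row joining a Dataverse incident with its Blob Storage frame path."""
    frame_path: str
    resolution: Optional[str]  # "confirmed", "rejected", or None if unresolved


def group_for_labeling(records: List[InferenceRecord]) -> Dict[str, List[str]]:
    """Split frames into positive and false_positive groups by the site engineer's resolution."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for record in records:
        if record.resolution == "confirmed":
            groups["positive"].append(record.frame_path)
        elif record.resolution == "rejected":
            groups["false_positive"].append(record.frame_path)
        # Unresolved incidents are skipped until a person reviews them.
    return dict(groups)


records = [
    InferenceRecord("frames/0001.jpg", "confirmed"),
    InferenceRecord("frames/0002.jpg", "rejected"),
    InferenceRecord("frames/0003.jpg", None),
]
print(group_for_labeling(records))
# → {'positive': ['frames/0001.jpg'], 'false_positive': ['frames/0002.jpg']}
```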


Performance

IoT devices have limited memory and processing power, so it's important to limit the size of the model container sent to the device. Be sure to use an IoT device that can do model inference and produce results in an acceptable amount of time.
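One way to check whether a candidate device is fast enough is to time the model on representative frames and compare a high percentile of the latency with the per-frame budget, which is 1000 / fps milliseconds (100 ms at 10 frames per second). The following sketch assumes that `infer` is any callable that runs your model on one frame; the 95th-percentile target is an illustrative choice, not a requirement of this architecture.

```python
import math
import time
from typing import Callable, Sequence


def frame_budget_ms(frames_per_second: float) -> float:
    """Time available per frame if inference must keep up with the camera."""
    return 1000.0 / frames_per_second


def p95(values: Sequence[float]) -> float:
    """Nearest-rank 95th percentile of a non-empty sequence."""
    if not values:
        raise ValueError("need at least one measurement")
    ordered = sorted(values)
    rank = math.ceil(95 * len(ordered) / 100)
    return ordered[rank - 1]


def meets_latency_budget(
    infer: Callable[[bytes], object],
    sample_frames: Sequence[bytes],
    frames_per_second: float,
) -> bool:
    """Time `infer` on representative frames and compare p95 latency with the budget."""
    latencies_ms = []
    for frame in sample_frames:
        start = time.perf_counter()
        infer(frame)
        latencies_ms.append((time.perf_counter() - start) * 1000.0)
    return p95(latencies_ms) <= frame_budget_ms(frames_per_second)


print(frame_budget_ms(10))  # → 100.0
```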

To optimize performance for training models, this example architecture uses Azure Premium Blob Storage. This performance tier is designed for workloads that require fast response times and high transaction rates, like the human-in-the-loop video labeling scenario.

Performance considerations also apply to the data ingestion pipeline. Data Factory provides a highly performant, cost-effective way to move data at scale.

Scalability

Most of the components used in this solution are managed services that automatically scale.

Scalability for the IoT application depends on IoT Hub quotas and throttling. Factors to consider include:

- The maximum daily quota of messages into IoT Hub.

- The quota of connected devices in an IoT Hub instance.

- Ingestion and processing throughput.
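To estimate whether a hub fits the daily message quota, multiply the number of devices by the messages each sends per day and by the number of 4-KB blocks per message, then divide by the per-unit quota. The following sketch assumes the S1 tier figures of 400,000 messages per unit per day, metered in 4-KB blocks; check the current IoT Hub quotas and pricing documentation before relying on them. Per-second throttling limits and the connected-device quota are separate constraints that this sketch doesn't model.

```python
import math

# Assumed IoT Hub S1 figures; verify against the current quotas and pricing docs.
S1_MESSAGES_PER_UNIT_PER_DAY = 400_000
METERED_BLOCK_BYTES = 4 * 1024  # the daily quota counts each 4-KB block as one message


def daily_metered_messages(devices: int, messages_per_device_per_day: int, message_bytes: int) -> int:
    """Messages counted against the daily quota, including multi-block messages."""
    blocks_per_message = max(1, math.ceil(message_bytes / METERED_BLOCK_BYTES))
    return devices * messages_per_device_per_day * blocks_per_message


def units_needed(metered_per_day: int, quota_per_unit: int = S1_MESSAGES_PER_UNIT_PER_DAY) -> int:
    """Smallest number of hub units whose combined daily quota covers the load."""
    return max(1, math.ceil(metered_per_day / quota_per_unit))


# 10 cameras, each sending one 1-KB message per second (86,400 per day).
load = daily_metered_messages(devices=10, messages_per_device_per_day=86_400, message_bytes=1024)
print(load, units_needed(load))  # → 864000 3
```

Because the edge module sends only inferencing results and metadata rather than every frame, the message count in this solution is usually far below one message per frame.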

In ML, scalability refers to the scale-out clusters used to train models against large datasets. Scalability also enables the ML model to meet the demands of the applications that consume it. To meet these needs, the ML cluster must be able to scale out on both CPU and graphics processing unit (GPU)-enabled nodes.

For general guidance on designing scalable solutions, see the performance efficiency checklist in the Azure Architecture Center.

Security

Security provides assurances against deliberate attacks and the abuse of your valuable data and systems. For more information, see Overview of the security pillar.

Access management in Dataverse and other Azure services helps ensure that only authorized users can access the environment, data, and reports. This solution uses Azure Key Vault to manage passwords and secrets. Storage is encrypted using customer-managed keys.

For general guidance on designing secure IoT solutions, see the Azure Security Documentation and the Azure IoT reference architecture.

Cost optimization

Cost optimization is about looking at ways to reduce unnecessary expenses and improve operational efficiencies. For more information, see Overview of the cost optimization pillar.

In general, use the Azure pricing calculator to estimate costs. For other considerations, see Cost optimization.

Azure Machine Learning also deploys Container Registry, Azure Storage, and Azure Key Vault services, which incur extra costs. For more information, see How Azure Machine Learning works: Architecture and concepts.

Azure Machine Learning pricing includes charges for the virtual machines (VMs) used to train the model in the cloud. For information about availability of Azure Machine Learning and VMs per Azure region, see Products available by region.

Contributors

This article is maintained by Microsoft. It was originally written by the following contributors.

Principal Author:

- Wilson Lee | Principal Software Engineer

Next steps

- IoT concepts and Azure IoT Hub

- What is Azure Logic Apps?

- What is Power Apps?

- Microsoft Power Apps documentation

- What is Microsoft Dataverse?

- Microsoft Dataverse documentation

- Introduction to Azure Data Lake Storage Gen2

- What is Azure Data Factory?

- Azure Machine Learning documentation

- What is Azure DevOps?

- What is Azure Pipelines?

- Azure Container Registry documentation

- Azure Monitor overview

- Azure IoT for safer workplaces

- Dow Chemical uses vision AI at the edge to boost employee safety and security with Azure

- Edge Object Detection GitHub sample
