This post gives an end-to-end overview of Azure Kubernetes Service (AKS) and Azure Container Instances (ACI).

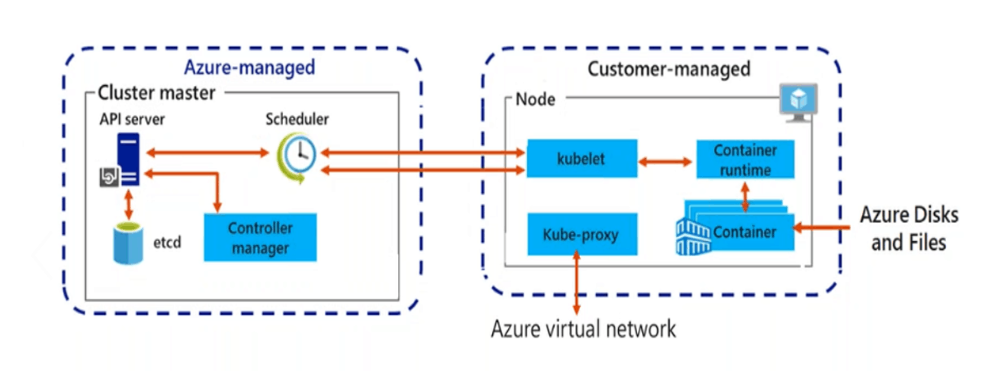

Kubernetes is an open-source platform for managing containerized workloads and services. In a self-managed cluster, you operate both the master (control plane) nodes and the worker nodes. Azure Kubernetes Service (AKS) is Azure's managed Kubernetes offering: Azure manages the master nodes, and the end user manages only the worker nodes.

In this video blog we will cover:

- What is Azure Container Instances (ACI)

- What Is Kubernetes?

- Azure Kubernetes Service (AKS)

- ACI vs AKS

- When to Use ACI

- When to use AKS

- Create Azure Kubernetes Cluster

- Service Types in K8S

- Azure Kubernetes Service Networking

- Azure Kubernetes Service Storage

- Azure Kubernetes Service Security

- Azure Kubernetes Service With CI/CD

What is Azure Container Instances (ACI)

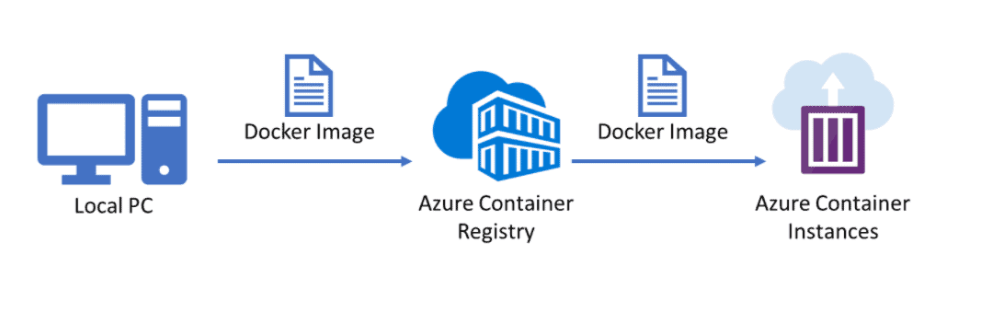

Azure Container Instances (ACI) is Microsoft's PaaS (Platform as a Service) solution that offers the fastest and simplest way to run a container in Azure without managing any underlying infrastructure. For container orchestration in Azure (building, managing, and deploying multiple containers), use Azure Kubernetes Service (AKS). You can deploy Azure Container Instances using the Azure portal, Azure CLI, PowerShell, or an ARM template. Just as with a Docker registry, you can push your images to Azure Container Registry (ACR), a private, secure registry provided by the Azure platform.

What Is Kubernetes?

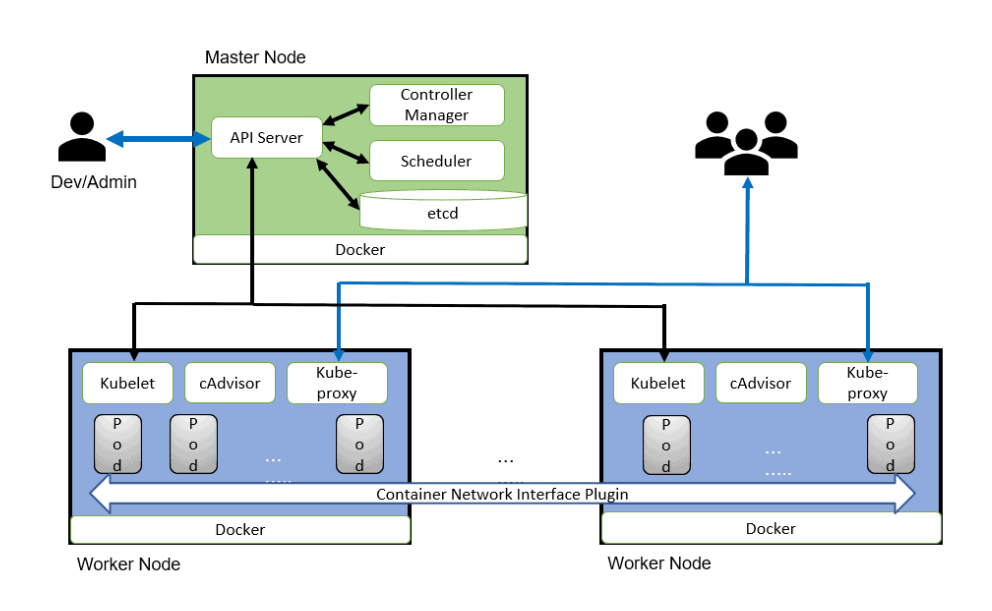

Organizations often run many containers across multiple hosts at the same time, which makes them very hard to manage by hand, so we use Kubernetes. Kubernetes is an open-source platform for managing containerized workloads and services. It takes care of scaling and failover for the applications running in your containers.

Azure Kubernetes Service (AKS)

Azure Kubernetes Service (AKS) is a managed Kubernetes service in which the master nodes are managed by Azure and end users manage the worker nodes. Users can use AKS to deploy, scale, and manage Docker containers and container-based applications across a cluster of container hosts. As a managed Kubernetes service, AKS is free: you pay only for the worker nodes within your clusters, not for the masters. You can create an AKS cluster in the Azure portal, with the Azure CLI, or with template-driven deployment options such as Resource Manager templates and Terraform.

ACI vs AKS

Pricing

ACI charges are based on the runtime of container groups, with pricing determined by the allocated vCPUs and memory. For instance, in the Central US region, Linux container groups cost $0.0000135 per vCPU per second and $0.0000015 per GB of RAM per second. If a container group uses 10 vCPUs and 100 GB of RAM, the memory costs $0.009 per minute and the vCPUs cost $0.0081 per minute, for a total of $0.0171 per minute, or about $1.03 per hour.
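
The arithmetic is easy to check with a short script. This is a minimal sketch that uses only the per-second rates quoted above as example figures, not live prices; the function name `aci_cost` is ours. It multiplies each rate by the allocated resources and the runtime in seconds.

```python
# Example per-second rates quoted in this post for Central US (Linux).
# They are illustrative figures, not live Azure prices.
VCPU_RATE_PER_SECOND = 0.0000135    # USD per vCPU per second
MEMORY_RATE_PER_SECOND = 0.0000015  # USD per GB of RAM per second


def aci_cost(vcpus: float, memory_gb: float, seconds: float) -> float:
    """Estimate the cost in USD of running one container group."""
    vcpu_cost = vcpus * VCPU_RATE_PER_SECOND * seconds
    memory_cost = memory_gb * MEMORY_RATE_PER_SECOND * seconds
    return vcpu_cost + memory_cost


if __name__ == "__main__":
    # 10 vCPUs and 100 GB of RAM, billed for one minute and for one hour.
    print(f"per minute: ${aci_cost(10, 100, 60):.4f}")
    print(f"per hour:   ${aci_cost(10, 100, 3600):.4f}")
```

Because billing is per second, a container group that runs for only a few minutes costs a small fraction of the hourly figure.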

On the other hand, AKS does not impose any additional charges for managing your Kubernetes environment. The billing is based on the VMs running your worker nodes, along with the associated storage and networking resources used by your clusters. The costs incurred are the same as running the equivalent VMs without AKS. To estimate expenses accurately, you need to consider the VM type, the number of required nodes, and their duration of operation. For up-to-date pricing information for Azure VMs, it is recommended to refer to Azure’s official documentation.
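
As a rough sketch of that estimate, the compute part of an AKS bill is the node count multiplied by the VM's hourly rate and the hours of operation. The hourly rate below is a made-up placeholder, not an Azure price, and storage and networking charges are left out.

```python
def aks_compute_cost(node_count: int, vm_hourly_rate: float, hours: float) -> float:
    """Estimate worker-node compute cost in USD (storage and networking excluded)."""
    return node_count * vm_hourly_rate * hours


if __name__ == "__main__":
    # HYPOTHETICAL rate for illustration only; look up the real price
    # for your VM size and region in Azure's pricing documentation.
    hypothetical_vm_hourly_rate = 0.10
    monthly = aks_compute_cost(node_count=3, vm_hourly_rate=hypothetical_vm_hourly_rate, hours=730)
    print(f"estimated monthly compute cost: ${monthly:.2f}")
```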

Scale

ACI's unit of deployment is the container group, in which multiple containers run on the same host and share resources, a network, and storage volumes. The concept resembles a Kubernetes pod: containers within the group share a lifecycle. ACI has no built-in autoscaler, so scaling out means deploying additional container groups.

In contrast, AKS leverages the scaling functionalities provided by Kubernetes. Users have the flexibility to manually scale their AKS pods or utilize horizontal pod autoscaling (HPA), which automatically adjusts the number of pods in a deployment based on metrics like CPU utilization or other specified criteria.
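
The core of the HPA calculation documented by Kubernetes is `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, skipped when the ratio is within a small tolerance of 1.0 (0.1 by default). The sketch below reproduces only that core rule; the function name and the default replica bounds are ours, and the real controller also handles missing metrics, pod readiness, and stabilization windows.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Simplified version of the HPA scaling rule."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: keep the current replica count.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Clamp the result to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 4 pods averaging 90% CPU against a 60% target.
    print(desired_replicas(current_replicas=4, current_metric=90, target_metric=60))
```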

Security

ACI offers the ability to utilize Azure Virtual Networks, which enable secure networking for Azure resources and on-premises workloads. By deploying container groups into Virtual Networks, ACI allows for secure communication between ACI containers and various entities, such as other container groups within the same subnet, databases located in the same Virtual Network, and on-premises resources accessed through a VPN gateway or ExpressRoute.

On the other hand, AKS provides access to the comprehensive security features of native Kubernetes, augmented by Azure capabilities like network security groups and orchestrated cluster upgrades. Keeping software components up to date is crucial for security, and AKS helps keep clusters on current operating system and Kubernetes versions, including security patches, through these orchestrated upgrades. Additionally, AKS protects sensitive credentials and the traffic to pods, ensuring secure access to these resources.

When to Use ACI

Azure Container Instances (ACI) is a suitable choice in several scenarios, including:

- Quick application development and testing: ACI allows for rapid deployment of containers without the need to manage underlying infrastructure. It is ideal for short-lived development and testing environments, enabling quick iteration and experimentation.

- Bursting workloads: ACI provides the ability to scale up or down rapidly based on workload demands. It is useful for handling peak traffic periods or sudden spikes in workload, allowing you to quickly scale your containerized applications without the need for long-term resource commitments.

- Task and batch execution: ACI is well-suited for running individual tasks or batch jobs that need to be executed without the overhead of managing a full-fledged container orchestration platform. It simplifies running one-off tasks, scheduled jobs, or data processing tasks.

- Microservices deployment: ACI can be used to deploy and manage individual microservices that require isolation and independent scaling. It allows you to run different microservices as separate container groups, providing flexibility and granular control over their resource allocation and lifecycles.

- Event-driven workloads: ACI can be integrated with various event-driven architectures and serverless computing models. It can serve as the execution environment for event-driven functions or as a component within a serverless architecture, allowing you to respond to events and triggers with container-based workloads.

Remember, ACI is a lightweight container execution environment and does not provide all the features of a full container orchestration platform like Azure Kubernetes Service (AKS). If you require advanced orchestration, scheduling, and management capabilities or have complex multi-container applications, AKS might be a more suitable choice.

When to use AKS

Azure Kubernetes Service (AKS) is an excellent choice in several scenarios, including:

- Containerized application deployment: AKS is designed for deploying and managing containerized applications at scale. If you have complex multi-container applications that require orchestration, scaling, and management capabilities, AKS provides a robust platform for running and managing these workloads.

- Production-grade workloads: AKS is well-suited for running production workloads that require high availability, scalability, and resilience. It offers features like automated scaling, load balancing, self-healing, and rolling updates, ensuring that your applications can handle production-level traffic and demands.

- Microservices architecture: AKS supports the deployment of microservices-based applications. It allows you to break down your application into smaller, decoupled services that can be independently deployed, scaled, and managed. AKS offers advanced networking capabilities, service discovery, and load balancing, enabling seamless communication and coordination between microservices.

- Continuous integration and deployment (CI/CD): AKS integrates well with popular CI/CD tools and provides a streamlined workflow for building, testing, and deploying containerized applications. It allows you to automate the deployment process, easily roll out updates, and ensure a consistent and reliable delivery pipeline.

- DevOps collaboration: AKS promotes collaboration between development and operations teams. It provides a consistent environment across development, testing, and production stages, enabling seamless collaboration and smoother application lifecycle management.

- Hybrid and multi-cloud deployments: AKS supports hybrid and multi-cloud scenarios, allowing you to run your Kubernetes workloads on both Azure and on-premises infrastructure. It offers integration with Azure Arc, enabling you to manage and govern your Kubernetes clusters across different environments from a centralized location.

Create Azure Kubernetes Cluster

Azure Kubernetes Service (AKS) is a managed Kubernetes service that lets you quickly deploy and manage clusters: Azure runs the master nodes, and you manage the worker nodes.

We can deploy an Azure Kubernetes cluster in three ways:

A) Azure portal

B) Azure CLI

C) Azure PowerShell
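
For the Azure CLI option, the command is `az aks create`. The sketch below only builds and prints the command line so it can be reviewed first; it does not run anything. The resource group and cluster names are placeholders, and it assumes the Azure CLI is installed, you are signed in, and the resource group already exists.

```python
import shlex


def build_aks_create_command(resource_group: str, cluster_name: str, node_count: int = 1) -> list[str]:
    """Return the argument list for a basic `az aks create` call."""
    return [
        "az", "aks", "create",
        "--resource-group", resource_group,
        "--name", cluster_name,
        "--node-count", str(node_count),
        "--generate-ssh-keys",
    ]


if __name__ == "__main__":
    # Placeholder names; replace them with your own.
    command = build_aks_create_command("myResourceGroup", "myAKSCluster", node_count=2)
    print(shlex.join(command))
```

To actually create the cluster, the same list could be passed to `subprocess.run(command, check=True)`.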

Note: We will cover how to create an Azure Kubernetes cluster in our next blog.

Service Types In K8S

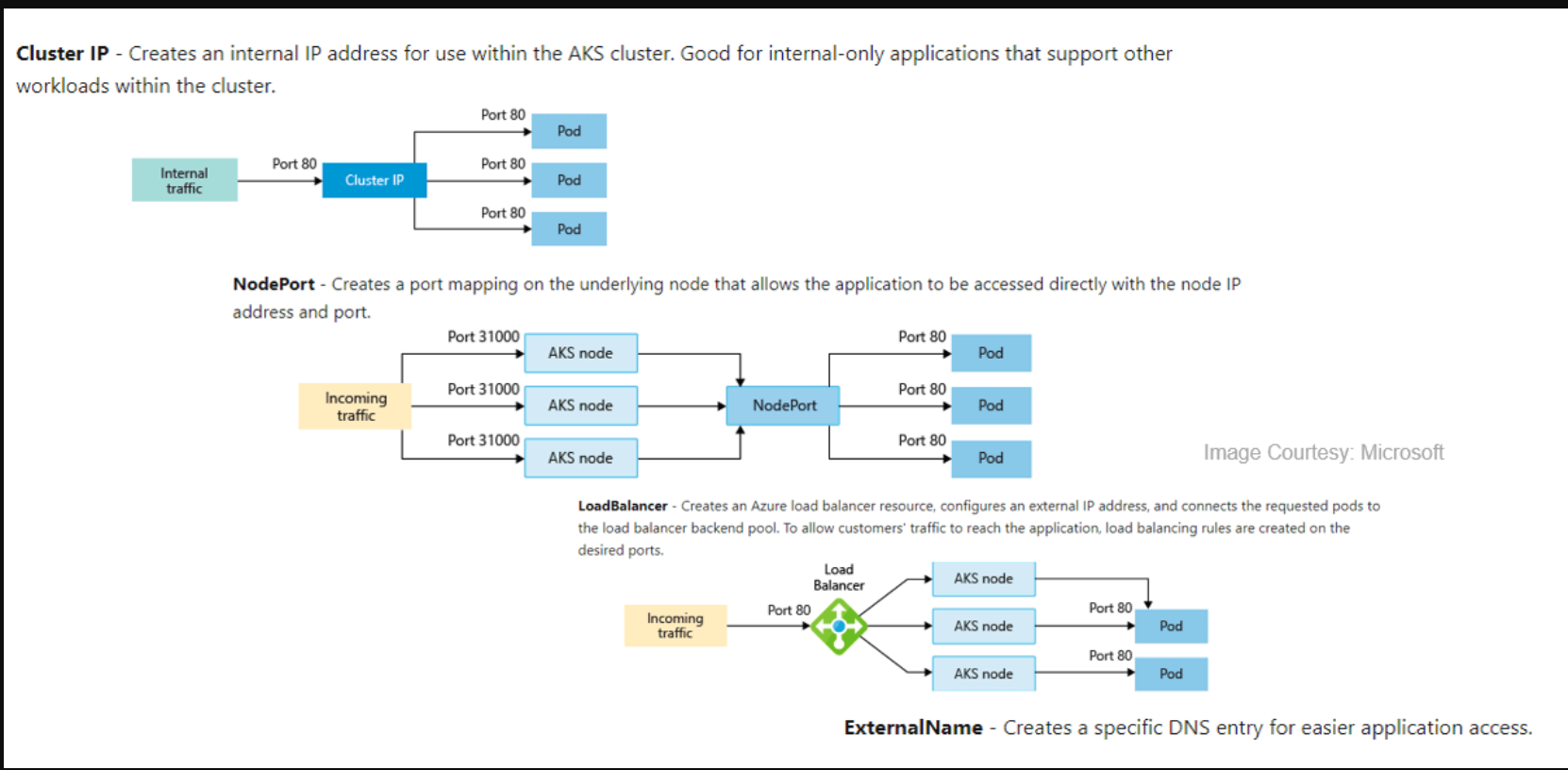

To simplify the network configuration for application workloads, Kubernetes uses Services to logically group a set of pods together and provide network connectivity.

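Kubernetes provides four Service types:

A) ClusterIP: exposes the Service on an internal IP address reachable only from inside the cluster.

B) NodePort: exposes the Service on a static port on every node's IP address.

C) LoadBalancer: exposes the Service externally through the cloud provider's load balancer.

D) ExternalName: maps the Service to an external DNS name instead of to a set of pods.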
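
To make the grouping concrete, here is a minimal sketch that builds a Service manifest as a Python dictionary and prints it as JSON, which `kubectl apply -f` accepts alongside YAML. The service name, the `app` label, and the ports are hypothetical. ExternalName is left out because it takes an `externalName` field instead of a pod selector.

```python
import json

# The three Service types that route traffic to pods through a label selector.
SELECTOR_BASED_TYPES = ("ClusterIP", "NodePort", "LoadBalancer")


def build_service(name: str, service_type: str, app_label: str, port: int, target_port: int) -> dict:
    """Build a Service manifest that groups all pods labelled app=<app_label>."""
    if service_type not in SELECTOR_BASED_TYPES:
        raise ValueError(f"unsupported service type: {service_type}")
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": service_type,
            "selector": {"app": app_label},  # pods with this label receive the traffic
            "ports": [{"port": port, "targetPort": target_port}],
        },
    }


if __name__ == "__main__":
    # Hypothetical web front end exposed through a cloud load balancer.
    manifest = build_service("web-frontend", "LoadBalancer", "web", port=80, target_port=8080)
    print(json.dumps(manifest, indent=2))
```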

Azure Kubernetes Service Networking

In AKS, we can deploy a cluster using the following networking models:

A) kubenet (Basic Networking)

B) CNI (Advanced Networking)

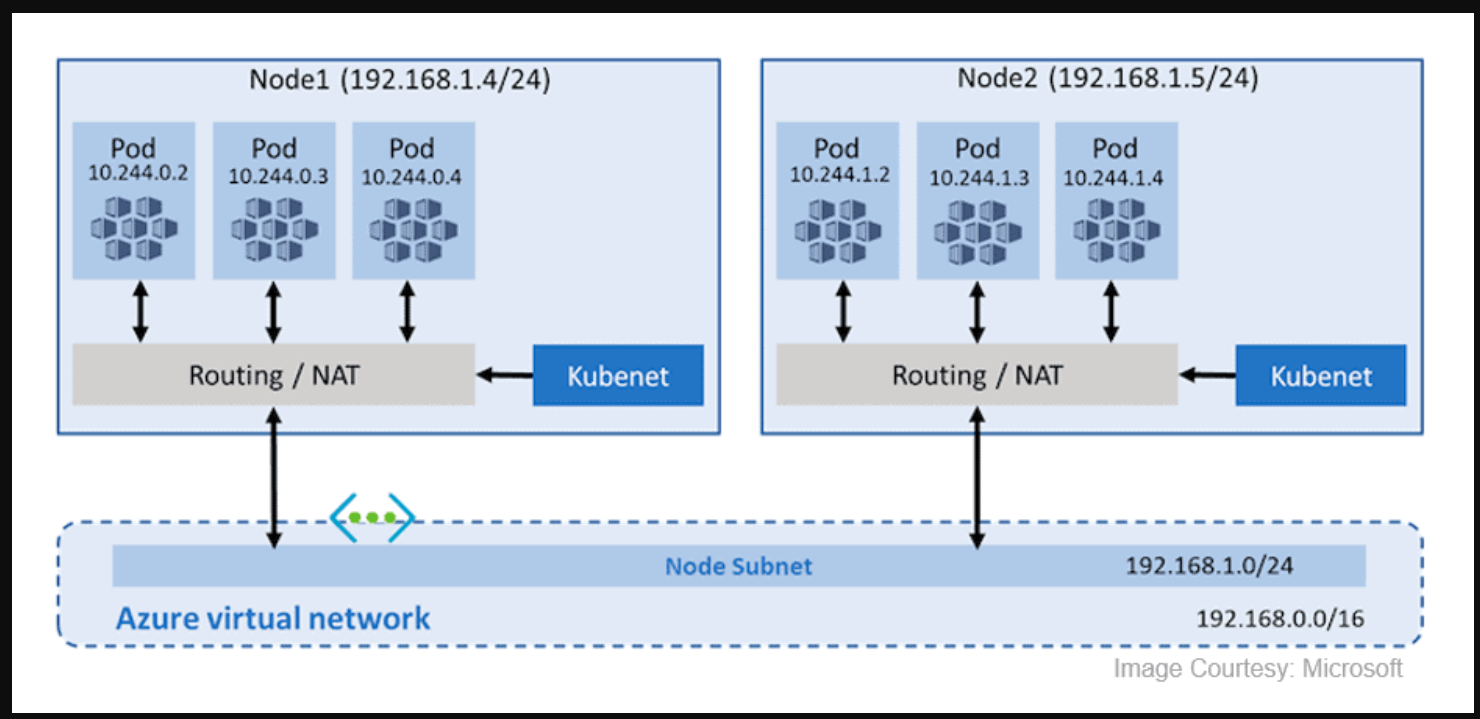

AKS kubenet (Basic Networking)

By default, Azure Kubernetes Service (AKS) clusters use kubenet, and an Azure virtual network and subnet are created for you. With kubenet, only the nodes receive an IP address in the virtual network subnet, and pods can't communicate directly with each other. Instead, user-defined routing (UDR) and IP forwarding are used for connectivity between pods across nodes. Pods receive their IP addresses from a separate address space, and network address translation (NAT) is used so that the pods can reach resources on the Azure virtual network: traffic leaving a pod is translated to the node's IP address.

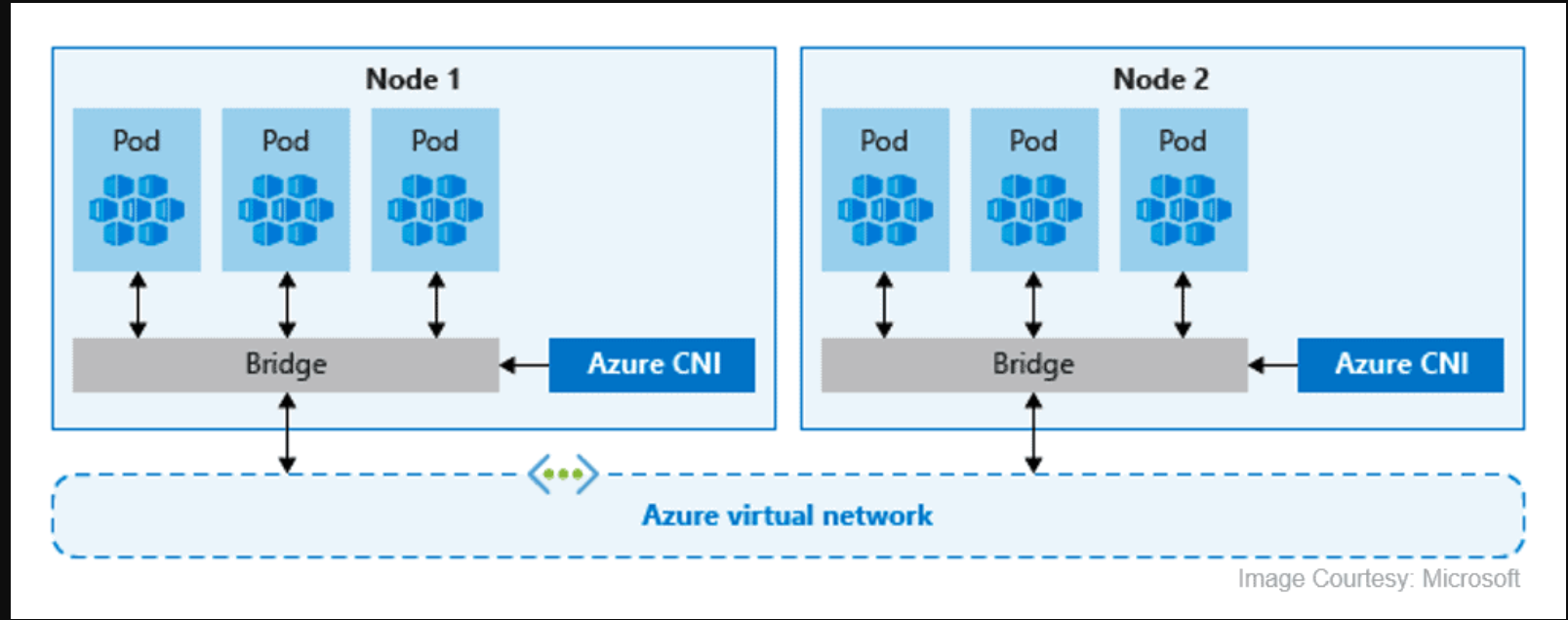

AKS CNI (Advanced Networking)

With Azure Container Networking Interface (CNI), every pod gets an IP address from the subnet and can be accessed directly via its private IP address from connected networks. These IP addresses must be unique across your network space and must be planned in advance. Advanced networking therefore requires more planning: if all IP addresses in the subnet are used up, you need to rebuild the cluster in a larger subnet as your application demands grow.
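
A quick way to do this planning is to compare the addresses a cluster needs with what a subnet offers. The sketch below uses the sizing rule from Azure's CNI guidance as we understand it: count one extra node for upgrades, then add one address per node plus one per pod slot. It also assumes the traditional default of 30 pods per node and the 5 addresses Azure reserves in every subnet. Treat those numbers as assumptions and confirm them against the current Azure documentation.

```python
import ipaddress

AZURE_RESERVED_IPS_PER_SUBNET = 5   # assumption: addresses Azure reserves in each subnet
DEFAULT_MAX_PODS_PER_NODE = 30      # assumption: traditional Azure CNI default


def required_ips(node_count: int, max_pods_per_node: int = DEFAULT_MAX_PODS_PER_NODE) -> int:
    """IP addresses needed: (nodes + 1) + (nodes + 1) * max pods per node."""
    nodes_with_surge = node_count + 1  # one extra node during upgrades
    return nodes_with_surge + nodes_with_surge * max_pods_per_node


def usable_ips(subnet_cidr: str) -> int:
    """Addresses left in the subnet after Azure's reserved ones."""
    return ipaddress.ip_network(subnet_cidr).num_addresses - AZURE_RESERVED_IPS_PER_SUBNET


def subnet_fits(subnet_cidr: str, node_count: int, max_pods_per_node: int = DEFAULT_MAX_PODS_PER_NODE) -> bool:
    return required_ips(node_count, max_pods_per_node) <= usable_ips(subnet_cidr)


if __name__ == "__main__":
    for cidr in ("10.240.0.0/24", "10.240.0.0/22", "10.240.0.0/21"):
        print(cidr, "fits 50 nodes:", subnet_fits(cidr, node_count=50))
```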

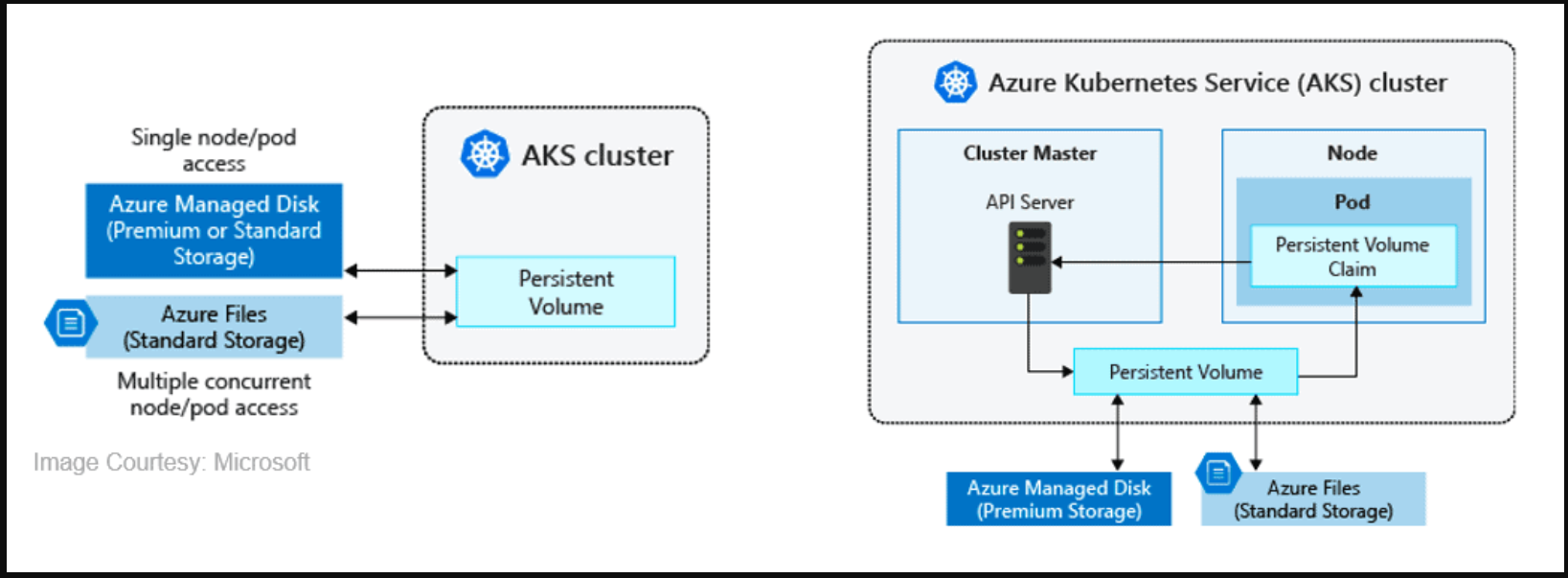

Azure Kubernetes Service Storage

In AKS, there are two types of storage options available:

A) Azure Disk

B) Azure Files

These options provide persistent storage for data that must outlive a pod. Azure Disks can be used to create a Kubernetes DataDisk resource and are mounted as ReadWriteOnce, so a disk is available to only a single node and cannot be shared by pods on different nodes. Azure Files is an SMB-based shared file system that can be mounted on multiple machines at once, so you can use it to share data across multiple nodes and pods.
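
In Kubernetes terms, the difference shows up in the access mode of a PersistentVolumeClaim: `ReadWriteOnce` for a disk and `ReadWriteMany` for a file share. The sketch below builds both claims as dictionaries and prints them as JSON for `kubectl apply -f`. The storage class names `managed-csi` and `azurefile-csi` are assumptions about the classes built into your cluster (check with `kubectl get storageclass`), and the claim names and size are hypothetical.

```python
import json

# Assumed built-in AKS storage class names; verify with `kubectl get storageclass`.
STORAGE_OPTIONS = {
    "azure-disk": {"storage_class": "managed-csi", "access_mode": "ReadWriteOnce"},
    "azure-files": {"storage_class": "azurefile-csi", "access_mode": "ReadWriteMany"},
}


def build_pvc(name: str, option: str, size: str = "5Gi") -> dict:
    """Build a PersistentVolumeClaim manifest for the chosen storage option."""
    settings = STORAGE_OPTIONS[option]
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": [settings["access_mode"]],
            "storageClassName": settings["storage_class"],
            "resources": {"requests": {"storage": size}},
        },
    }


if __name__ == "__main__":
    print(json.dumps(build_pvc("db-data", "azure-disk"), indent=2))
    print(json.dumps(build_pvc("shared-uploads", "azure-files"), indent=2))
```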

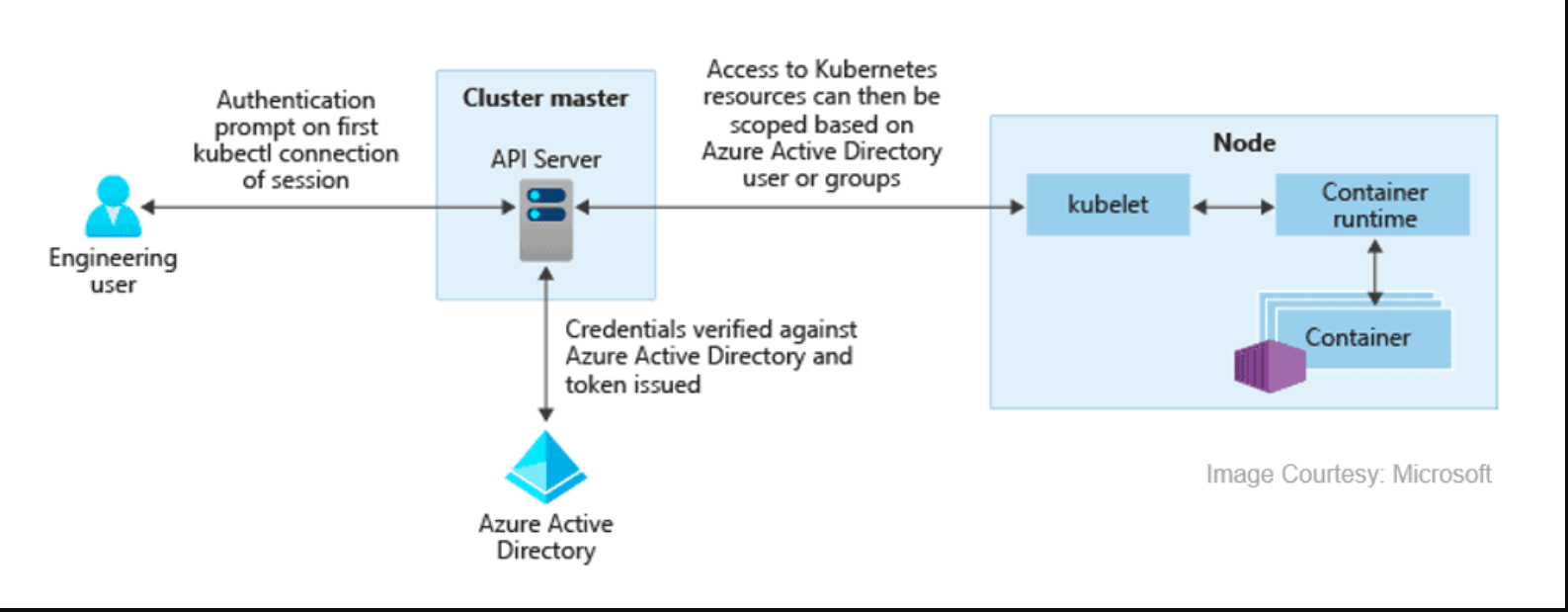

Azure Kubernetes Service Security

Azure Active Directory with AKS: We can integrate AKS with Azure Active Directory (Azure AD) for user authentication. Users in Azure AD can then access the AKS cluster using an Azure AD authentication token. We can also configure Kubernetes role-based access control (RBAC) based on a user's identity. Azure AD legacy integration can only be enabled during cluster creation.
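
As an illustration of identity-based RBAC, the sketch below builds a Kubernetes RoleBinding that grants an existing Role to an Azure AD group. It assumes the group is referenced by its object ID once Azure AD integration is enabled. The all-zero GUID, the namespace, and the role name `pod-reader` are placeholders, and the Role itself must already exist.

```python
import json

RBAC_API_GROUP = "rbac.authorization.k8s.io"


def build_role_binding(name: str, namespace: str, role_name: str, group_object_id: str) -> dict:
    """Bind an existing namespaced Role to an Azure AD group."""
    return {
        "apiVersion": f"{RBAC_API_GROUP}/v1",
        "kind": "RoleBinding",
        "metadata": {"name": name, "namespace": namespace},
        "subjects": [
            # The subject name is the Azure AD group's object ID (placeholder below).
            {"kind": "Group", "name": group_object_id, "apiGroup": RBAC_API_GROUP}
        ],
        "roleRef": {"kind": "Role", "name": role_name, "apiGroup": RBAC_API_GROUP},
    }


if __name__ == "__main__":
    binding = build_role_binding(
        name="dev-team-pod-reader",
        namespace="dev",
        role_name="pod-reader",  # hypothetical Role
        group_object_id="00000000-0000-0000-0000-000000000000",  # placeholder GUID
    )
    print(json.dumps(binding, indent=2))
```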

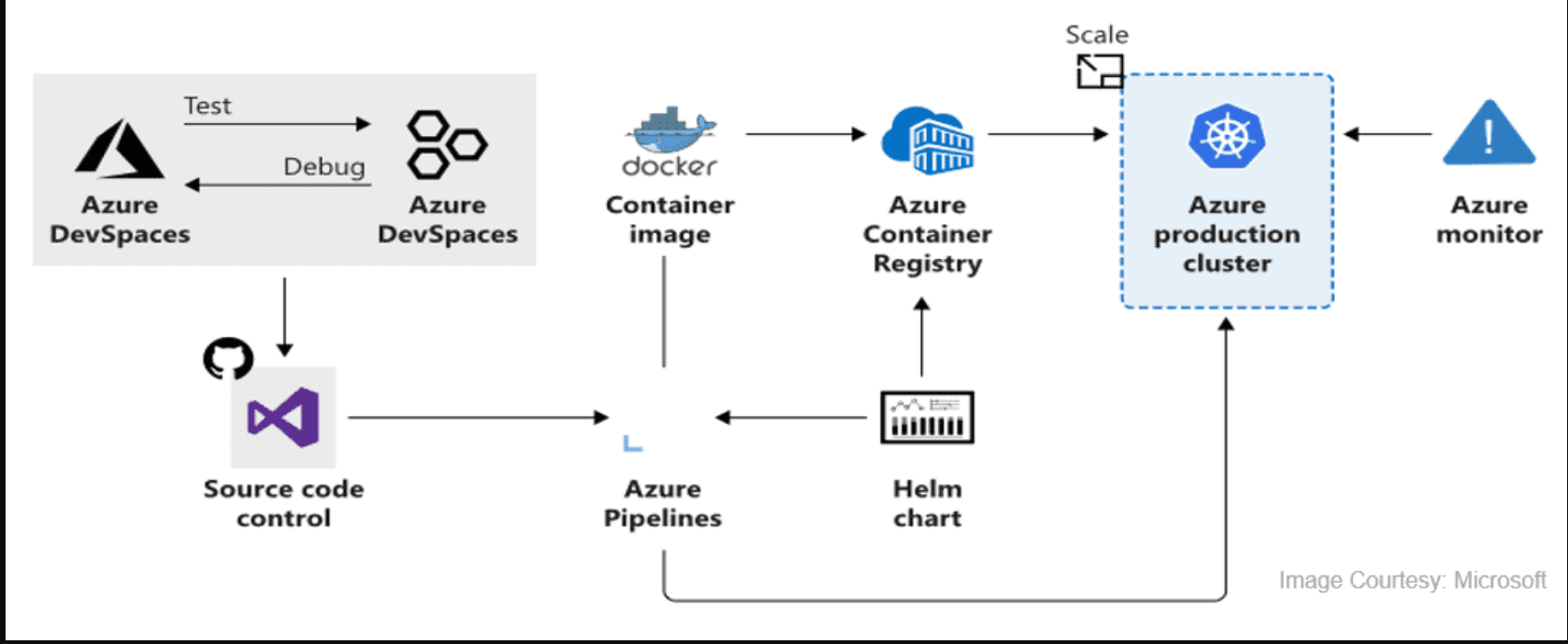

Azure Kubernetes Service With CI/CD

We can use AKS in a CI/CD environment to continuously build and deploy applications to Azure Kubernetes Service. Deploying through a pipeline to AKS gives us replicable, manageable clusters of containers.

Note: We will cover Azure Kubernetes Service with CI/CD in our upcoming blog.
